PySpark filter

In this PySpark article, you will learn how to apply a filter on DataFrame columns of string, array, and struct types using single and multiple conditions, and how to apply a filter using isin, with PySpark (Python Spark) examples. Note: PySpark Column functions provide several options that can be used with filter. The syntax of the filter function is filter(condition), where condition is the expression you want to filter on.

In the realm of big data processing, PySpark has emerged as a powerful tool for data scientists. It allows for distributed data processing, which is essential when dealing with large datasets. One common operation in data processing is filtering data based on certain conditions. A PySpark DataFrame is a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in Python, but with optimizations for speed and functionality under the hood. PySpark DataFrames are designed for processing large amounts of structured or semi-structured data. The filter transformation in PySpark allows you to specify conditions to filter rows based on column values.

PySpark is the Python API for Apache Spark, a popular open-source distributed data processing engine. One of the most common tasks when working with PySpark DataFrames is filtering rows based on certain conditions. The filter function is one of the most straightforward ways to filter rows in a PySpark DataFrame. It takes a boolean expression as an argument and returns a new DataFrame containing only the rows that satisfy the condition. When combining several conditions, make sure to wrap each one in parentheses, as this maintains the correct order of operations.

The condition passed to filter is either a Column of BooleanType or a string containing a SQL expression.

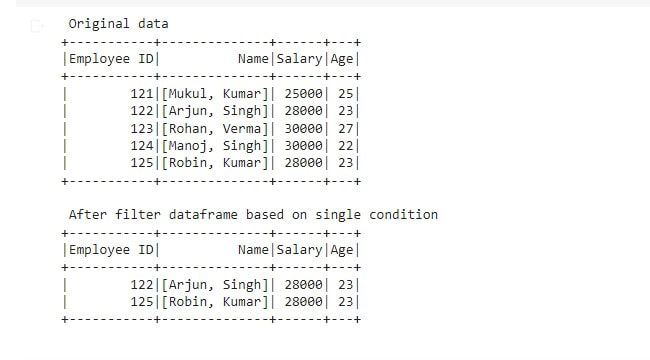

Filters can be applied to DataFrame columns of string, array, and struct types, using single or multiple conditions as well as isin. In filter(condition), condition is the expression you want to filter on. Let's start with a DataFrame before moving on to examples.

The PySpark filter function is a workhorse for data analysis. A PySpark DataFrame is a distributed collection of data organized into columns, and filter selects the rows of interest from it. In PySpark, the filter and where functions are interchangeable. Column functions such as isin, like, and rlike handle more complex filtering scenarios. Multiple conditions on columns can be combined using logical operators, which makes PySpark a powerful tool for filtering large datasets.

PySpark also provides powerful tools for filtering a DataFrame based on a list of values.
