Huggingface tokenizers

Released: Feb 12

Big shoutout to rlrs for the fast replace normalizers PR. This boosts the performance of the tokenizers. Full Changelog: v0. Reworks the release pipeline. Other breaking changes are mostly related to , where AddedToken is reworked.

Feb 10,

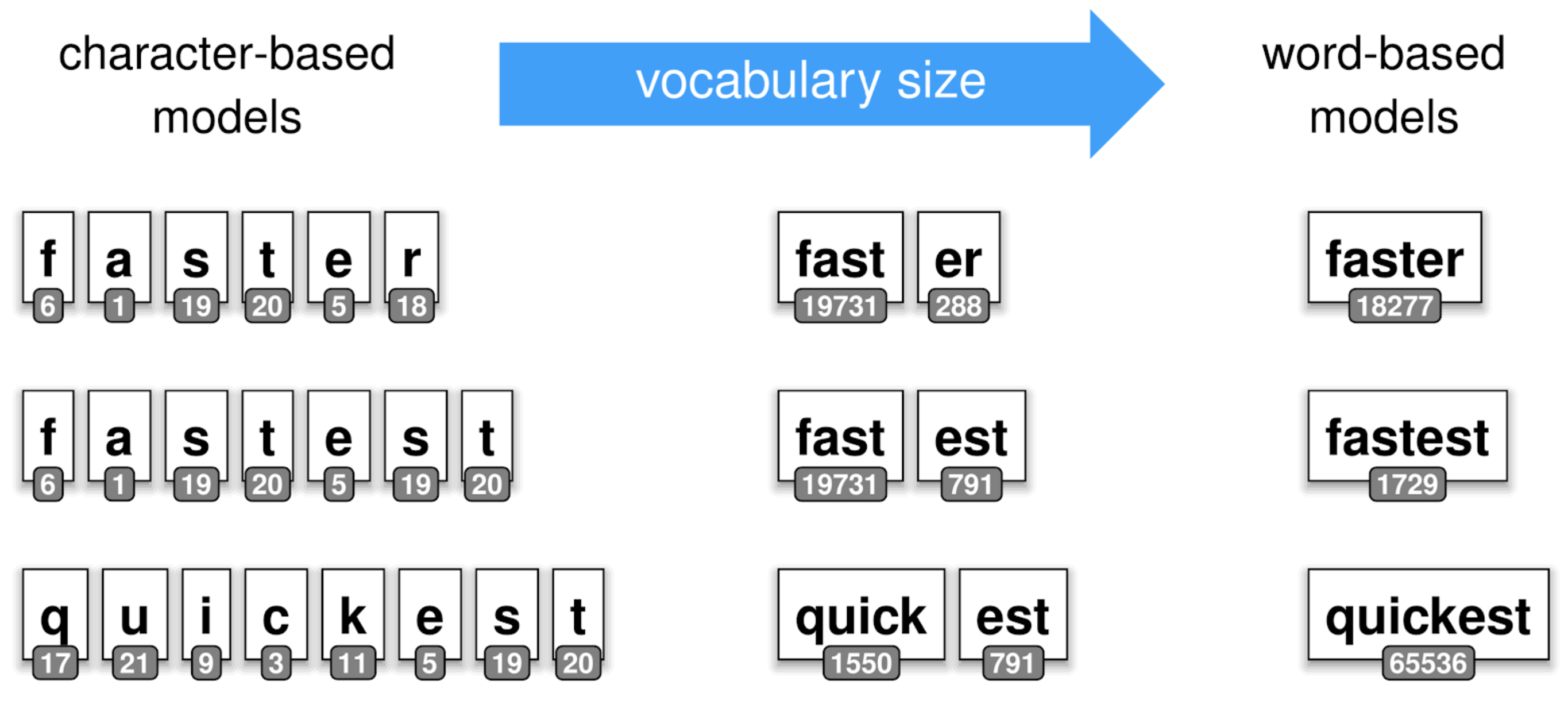

A tokenizer is in charge of preparing the inputs for a model. The library contains tokenizers for all the models. Inherits from PreTrainedTokenizerBase. The stride argument defines the number of overlapping tokens. With is_split_into_words set to True, the tokenizer assumes the input is already split into words (for instance, by splitting it on whitespace), which it will then tokenize. This is useful for NER or token classification. Requires padding to be activated.
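The overlapping-tokens argument (called stride in the transformers tokenizer API) can be pictured with a minimal sketch in plain Python. The helper chunk_with_stride is hypothetical, not part of transformers or tokenizers; it assumes the input is already a flat list of token ids and ignores special tokens and sequence pairs, which the real implementation has to account for.

```python
from typing import List


def chunk_with_stride(ids: List[int], max_length: int, stride: int) -> List[List[int]]:
    """Split ids into windows of at most max_length tokens (hypothetical helper).

    Every window after the first starts with the last `stride` tokens of
    the previous window, so consecutive windows overlap by `stride` tokens.
    """
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if not 0 <= stride < max_length:
        raise ValueError("stride must satisfy 0 <= stride < max_length")
    step = max_length - stride  # how far each new window advances
    chunks = []
    start = 0
    while True:
        chunks.append(ids[start:start + max_length])
        if start + max_length >= len(ids):  # this window reached the end
            break
        start += step
    return chunks


print(chunk_with_stride(list(range(10)), max_length=4, stride=1))
# [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```

The overlap means a token near a window boundary still appears with some left context in the following window, which is the usual motivation for a non-zero stride.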

As we saw in the preprocessing tutorial, tokenizing a text means splitting it into words or subwords, which are then converted to ids through a look-up table. Converting words or subwords to ids is straightforward, so this summary focuses on splitting a text into words or subwords (i.e., tokenizing a text). Note that on each model page, you can look at the documentation of the associated tokenizer to find out which tokenizer type the pretrained model used. For instance, the BertTokenizer documentation shows that the model uses WordPiece. Splitting a text into smaller chunks is harder than it looks, and there are multiple ways of doing so. Take the text "Don't you love 🤗 Transformers? We sure do." A simple way of tokenizing this text is to split it by spaces, which would give: ["Don't", "you", "love", "🤗", "Transformers?", "We", "sure", "do."]. This is a sensible first step, but if we look at the tokens "Transformers?" and "do.", we notice that the punctuation is attached to the words "Transformers" and "do", which is suboptimal.
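The difference between plain space splitting and splitting that also separates punctuation can be reproduced with the Python standard library. This is a small sketch assuming the example text is "Don't you love 🤗 Transformers? We sure do."; the regular expression is an illustrative choice, not the exact pre-tokenizer any Hugging Face model uses.

```python
import re

text = "Don't you love 🤗 Transformers? We sure do."

# 1) Naive space tokenization: punctuation stays glued to the words.
space_tokens = text.split()

# 2) Space + punctuation tokenization (illustrative regex): runs of word
#    characters become tokens, and every other non-space character
#    becomes a token of its own.
punct_tokens = re.findall(r"\w+|[^\w\s]", text)

print(space_tokens)
# ["Don't", 'you', 'love', '🤗', 'Transformers?', 'We', 'sure', 'do.']
print(punct_tokens)
# ['Don', "'", 't', 'you', 'love', '🤗', 'Transformers', '?', 'We', 'sure', 'do', '.']
```

Separating punctuation fixes "Transformers?" and "do.", but it also splits "Don't" into three pieces, which is why rule-based word tokenizers add extra rules for cases like contractions.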

Consequently, the tokenizer splits "gpu" into known subwords: ["gp", "##u"]. AddedToken: tokens are only added if they are not already in the vocabulary. Define the truncation and padding strategies for fast tokenizers (provided by the Hugging Face tokenizers library) and restore the tokenizer settings afterwards. New tokens can be added to the vocabulary in a way that is independent of the underlying structure (BPE, SentencePiece…). If the batch comprises only one sequence, this can be the index of the token in the sequence. Taking punctuation into account, tokenizing our example text would give: Normalization comes with alignment tracking. There are also variations of word tokenizers that have extra rules for punctuation. The tokenizers library is easy to use, but also extremely versatile.
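How a word such as "gpu" ends up as ["gp", "##u"] can be sketched with the greedy longest-match-first procedure that WordPiece (as used by BERT) applies to each word. This is a simplified sketch: the function wordpiece_tokenize and the toy vocabulary are hypothetical, and the real BertTokenizer also lowercases, splits on punctuation, and caps the word length before this step.

```python
from typing import List, Set


def wordpiece_tokenize(word: str, vocab: Set[str], unk_token: str = "[UNK]") -> List[str]:
    """Greedy longest-match-first split of one word into WordPiece subwords.

    Pieces that do not start the word carry the '##' continuation prefix.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # no piece matched: the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


toy_vocab = {"i", "have", "a", "new", "gp", "##u"}  # hypothetical vocabulary
print(wordpiece_tokenize("gpu", toy_vocab))  # ['gp', '##u']
print(wordpiece_tokenize("gpx", toy_vocab))  # ['[UNK]']
```

Because the whole word falls back to the unknown token when any remainder cannot be matched, a vocabulary that contains every single character (with and without "##") guarantees that no word becomes unknown.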

When calling Tokenizer. For the examples that require a Tokenizer, we will use the tokenizer we trained in the quicktour, which you can load with:

One possible solution is to use language-specific pre-tokenizers. This is useful if you want to add BOS or EOS tokens automatically. In contrast to BPE or WordPiece, Unigram initializes its base vocabulary to a large number of symbols and progressively trims it down to obtain a smaller vocabulary. Space and punctuation tokenization and rule-based tokenization are both examples of word tokenization, which is loosely defined as splitting sentences into words.
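Adding BOS/EOS-style markers automatically amounts to wrapping every encoded sequence in a fixed template. The sketch below is plain Python with a hypothetical add_special_tokens helper and assumed BERT-style markers "[CLS]" and "[SEP]"; in the tokenizers library this job is done by post-processors such as TemplateProcessing, whose exact options are not reproduced here.

```python
from typing import List, Optional


def add_special_tokens(
    tokens: List[str],
    pair: Optional[List[str]] = None,
    bos: str = "[CLS]",  # assumed marker names, BERT-style
    eos: str = "[SEP]",
) -> List[str]:
    """Wrap one sequence as `bos A eos`, or a pair as `bos A eos B eos`."""
    out = [bos, *tokens, eos]
    if pair is not None:
        out += [*pair, eos]  # the second segment is closed by its own eos
    return out


print(add_special_tokens(["hello", "world"]))
# ['[CLS]', 'hello', 'world', '[SEP]']
print(add_special_tokens(["how", "are", "you"], pair=["fine"]))
# ['[CLS]', 'how', 'are', 'you', '[SEP]', 'fine', '[SEP]']
```

Keeping this step separate from the tokenization model means the same vocabulary can serve models that expect different special-token layouts.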

0 thoughts on “Huggingface tokenizers”