YOLO-NAS

The YOLO-NAS Pose model offers an excellent balance between latency and accuracy. Pose estimation plays a crucial role in computer vision and has a wide range of applications, including monitoring patient movements in healthcare, analyzing the performance of athletes in sports, creating seamless human-computer interfaces, and improving robotic systems.

As usual, we have prepared a Google Colab that you can open in a separate tab to follow our tutorial step by step. Before we start training, we need to prepare our Python environment. The model is still being actively developed, so it is a good idea to pin a specific version of the package to keep the environment stable. In addition, we will install roboflow and supervision, which will allow us to download the dataset from Roboflow Universe and visualize the results of our training, respectively. The easiest way to check that the environment works is to run a test inference using one of the pre-trained models.

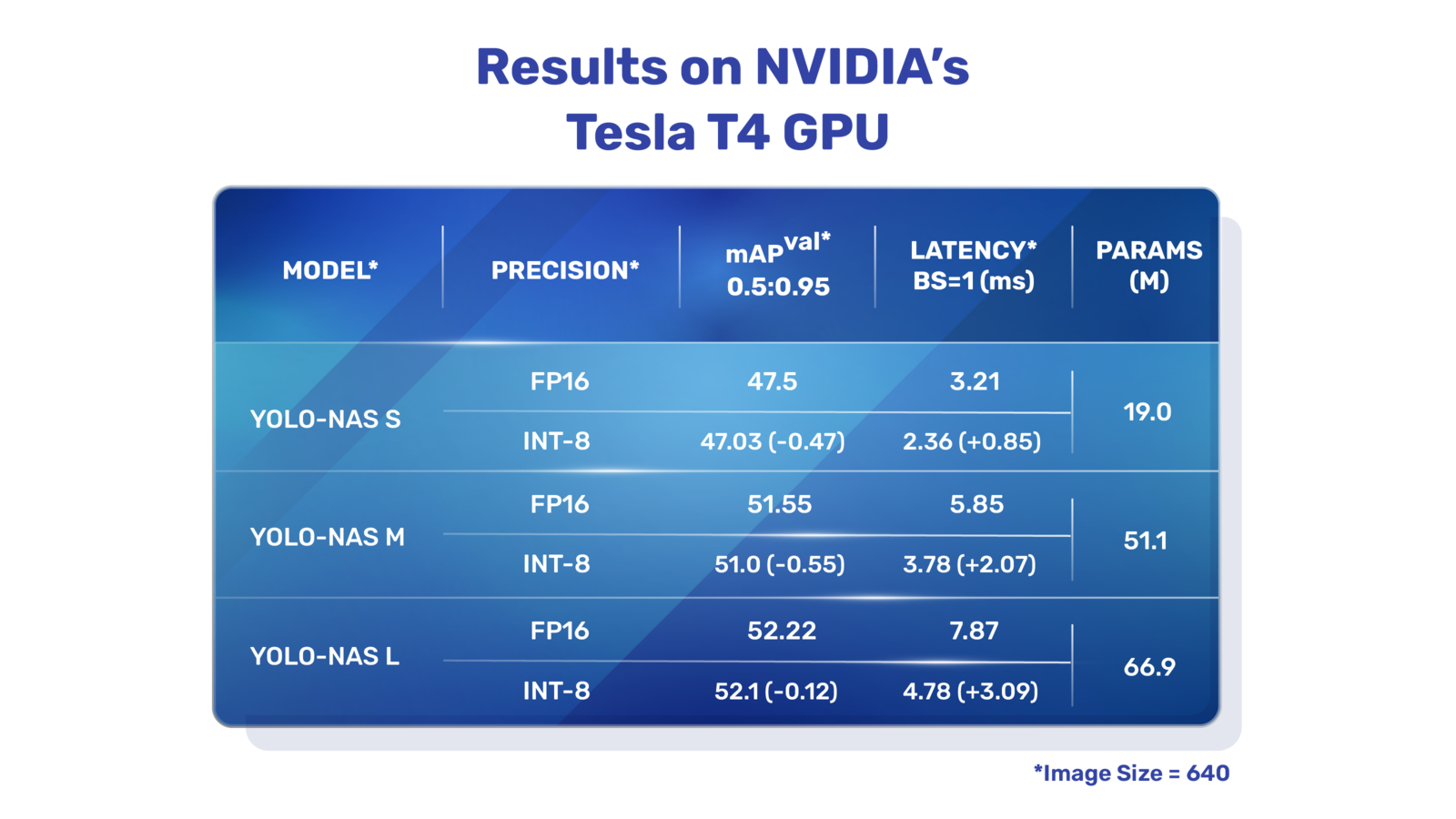

YOLO-NAS brings notable enhancements in quantization support and in the balance between accuracy and latency, a significant advance in the field of object detection. It includes quantization-aware blocks. Quantization converts the weights, biases, and activations of a neural network from floating-point values to 8-bit integer values (INT8), which makes the model more efficient. Converting YOLO-NAS to its INT8 quantized version causes only a minimal reduction in precision, a major improvement over other YOLO models. Together, these enhancements yield an architecture with strong object detection capabilities and performance, and the effort has expanded what is possible in real-time object detection.

This article begins with a concise exploration of the model's architecture, followed by an in-depth explanation of the AutoNAC concept.

YOLO-NAS models are pre-trained on the Objects365 dataset, a large multi-category collection of 2 million images and 30 million bounding boxes. They are then trained on pseudo-labeled images extracted from COCO unlabeled images. The training process is further enriched with knowledge distillation and Distribution Focal Loss (DFL).
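To make the float-to-INT8 conversion concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is an illustration only: the function names `quantize_int8` and `dequantize` are hypothetical, and the scheme shown (one scale for the whole tensor, range -127 to 127) is a common textbook choice, not necessarily the one YOLO-NAS uses.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


# Toy weights (made-up values, for illustration only).
weights = [0.5, -1.27, 0.003, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The round-trip error is what a quantized model pays in precision. Quantization-aware blocks are designed so that this error has little effect on the final predictions.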

To access the notebook, click the link provided in the article.

YOLO-NAS is the product of advanced Neural Architecture Search technology, designed to address the limitations of previous YOLO models. With significant improvements in quantization support and accuracy-latency trade-offs, it represents a major leap in object detection. When converted to its INT8 quantized version, the model experiences only a minimal precision drop, a significant improvement over other models. These advancements culminate in an architecture with strong object detection capabilities, built to perform well in both speed and accuracy. Several variants are available to suit different needs.

Developing a new YOLO-based architecture can redefine state-of-the-art (SOTA) object detection by addressing existing limitations and incorporating recent advancements in deep learning. YOLO-NAS comes from the deep learning firm Deci. The model delivers real-time object detection with high, production-ready performance. The team incorporated recent advancements in deep learning to address key limiting factors of current YOLO models, such as inadequate quantization support and insufficient accuracy-latency trade-offs, and in doing so pushed the boundaries of real-time object detection.

Mean Average Precision (mAP) is a performance metric for evaluating machine learning models, and it is the accuracy measure used for object detectors here.

Neural Architecture Search (NAS) replaces manual design and human intuition with optimization algorithms that discover the most suitable architecture for a given task. NAS aims to find an architecture that achieves the best trade-off between accuracy, computational complexity, and model size.

The full details of the training regimen had not been disclosed at the time of writing. From what we can gather from the official press release, the models underwent a coherent and expensive training process.
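The idea of searching for the best accuracy-latency trade-off can be sketched with a toy example. Everything below is an assumption made for illustration: the search space, the `proxy_accuracy` and `proxy_latency_ms` formulas, and the exhaustive loop are invented stand-ins. Real NAS systems train or estimate candidate networks and use far more sophisticated search strategies.

```python
from itertools import product

# Hypothetical search space: number of blocks and channels per block.
SEARCH_SPACE = {"depth": [2, 4, 6], "width": [32, 64, 128]}


def proxy_accuracy(depth, width):
    """Toy stand-in for measured accuracy: larger networks score higher."""
    return 1.0 - 1.0 / (depth + width / 16)


def proxy_latency_ms(depth, width):
    """Toy stand-in for measured latency: cost grows with depth and width."""
    return 0.05 * depth * width


def search(latency_budget_ms):
    """Return (score, architecture) for the best candidate within the budget, or None."""
    best = None
    for depth, width in product(SEARCH_SPACE["depth"], SEARCH_SPACE["width"]):
        if proxy_latency_ms(depth, width) > latency_budget_ms:
            continue  # too slow for the target hardware
        score = proxy_accuracy(depth, width)
        if best is None or score > best[0]:
            best = (score, {"depth": depth, "width": width})
    return best


best_score, best_arch = search(latency_budget_ms=15.0)
```

Changing the latency budget changes which architecture wins, which is the point: the search is driven by the deployment constraint and not by accuracy alone.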

YOLO models are famous above all for their accuracy and their real-time performance. The first version of YOLO changed how object detection was performed by treating it as a single regression problem: it divided images into a grid and simultaneously predicted bounding boxes and class probabilities. Though it was faster than previous object detection methods, YOLOv1 had limitations in detecting small objects and struggled with localization accuracy. Since the first YOLO architecture hit the scene, 15 YOLO-based architectures have been developed, all known for their accuracy and real-time performance, and for enabling object detection on edge devices and in the cloud.

In computer vision, the competition for cutting-edge object detection is fierce. At Deci, a team of researchers aimed to create a model that would stand out among the rest. Just as sprinters aim to shave milliseconds off their times, the team set out to push the limits of performance, knowing that even small increases in mAP and reductions in latency could reshape the object detection model landscape.
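The grid formulation can be illustrated with a short sketch that assigns an object to the grid cell containing its center and expresses the box relative to that cell. The helper name `encode_box` and the example numbers are hypothetical. The 7x7 default matches the grid size used in the original YOLO paper, but the encoding here is simplified.

```python
def encode_box(cx, cy, w, h, img_w, img_h, grid=7):
    """Assign a box (center x/y, width, height in pixels) to a grid cell.

    Returns the cell's row and column plus a regression target:
    center offsets inside the cell and box size relative to the image.
    """
    # Position of the center measured in grid units.
    gx = cx / img_w * grid
    gy = cy / img_h * grid
    # The cell that contains the center is responsible for the object.
    col = min(int(gx), grid - 1)
    row = min(int(gy), grid - 1)
    return row, col, (gx - col, gy - row, w / img_w, h / img_h)


# A 100x50 pixel box centered at (224, 112) in a 448x448 image.
row, col, target = encode_box(cx=224, cy=112, w=100, h=50, img_w=448, img_h=448)
```

Because every cell predicts its targets in one forward pass, detection becomes a single regression over the whole image, with no separate region-proposal stage.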

In the field of AI research, the growing complexity of deep learning models has spurred a surge in diverse applications. Neural architecture search navigates the vast architecture search space and returns the best architectural designs. YOLO-NAS comes in three sizes: small, medium, and large. The results these models achieve are still some of the best available.

In knowledge distillation, a teacher model generates predictions that serve as soft targets for the student model, which strives to match them while also fitting the original labeled data.

Once a model has been downloaded, load it onto the GPU device if one is available. When a model and an input size are passed to the 'summary' function, it returns the model architecture.
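The teacher-student idea can be sketched as a loss with two terms. This is the generic classification-style formulation of knowledge distillation, written in plain Python for illustration. The temperature and `alpha` values are arbitrary assumptions, and nothing here is taken from the actual YOLO-NAS training code.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend the hard-label loss with a soft-target loss from the teacher."""
    # Hard term: cross-entropy against the original labeled data.
    hard = -math.log(softmax(student_logits)[label])
    # Soft term: KL divergence from the teacher's softened predictions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s)
               for t, s in zip(p_teacher, p_student) if t > 0)
    # The T^2 factor keeps the soft term's gradient scale comparable.
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


teacher = [2.0, 0.5, -1.0]
loss_matching = distillation_loss([2.0, 0.5, -1.0], teacher, label=0)
loss_uninformed = distillation_loss([0.0, 0.0, 0.0], teacher, label=0)
```

A student whose logits match the teacher's pays only the hard-label term, while an uninformed student is penalized by both terms.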

Easily train or fine-tune SOTA computer vision models with one open source training library.

The model's advanced architecture incorporates state-of-the-art techniques, including attention mechanisms, quantization-aware blocks, and reparametrization during inference, enhancing its object detection capabilities. The NAS component redesigns an already trained model to work even better on specific types of hardware while keeping its baseline accuracy.

To perform inference using the pre-trained COCO model, we first need to choose the size of the model. We download the model with the get function and then load it onto the GPU device, if available. The predict method returns a list of predictions, where each prediction corresponds to an object detected in the image. For pose estimation, the matching boxes and poses are then selected, and together they form the model output.
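The inference steps above can be sketched as follows. The text does not name the package, so this sketch assumes it is the open-source super-gradients library, whose `models.get` and `predict` calls match the description. The helper names `load_pretrained` and `detect`, the `MODEL_NAMES` mapping, and the confidence value are illustrative choices. Imports are placed inside the function so the snippet can be read and loaded without the library installed.

```python
# Assumed model identifiers for the three sizes mentioned in the text.
MODEL_NAMES = {"small": "yolo_nas_s", "medium": "yolo_nas_m", "large": "yolo_nas_l"}


def load_pretrained(size="small"):
    """Download COCO-pretrained weights and move the model to the GPU if available."""
    # Imported lazily: both packages are heavy, optional dependencies here.
    import torch
    from super_gradients.training import models

    device = "cuda" if torch.cuda.is_available() else "cpu"
    model = models.get(MODEL_NAMES[size], pretrained_weights="coco")
    return model.to(device)


def detect(model, image_path, confidence=0.35):
    """Run inference on one image and return the library's prediction object."""
    return model.predict(image_path, conf=confidence)


# Example usage (requires torch and super-gradients; the path is a placeholder):
# model = load_pretrained("small")
# result = detect(model, "path/to/image.jpg")
```

Pinning the library version, as recommended earlier, matters here because model identifiers and method signatures may change between releases.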
