VSeeFace

I am having a major issue and am at my wits' end. I have uninstalled old versions of VSeeFace and my Leap Motion software, and I have installed the new Leap Motion Gemini software. I have confirmed through the Visualizer that the software is detecting my hands.

VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual YouTubers, with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs only on 64-bit Windows 8 and above. Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required.


BlendShapeHelper is a Unity script that helps to automatically create blendshapes for material toggles.

The avatar should now move according to the received data, according to the settings below. VSeeFace interpolates between tracking frames, so even low frame rates like 15 or 10 frames per second might look acceptable.
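To illustrate why interpolating between tracking frames can make a low tracking rate look smooth, here is a minimal sketch in Python. It assumes simple linear interpolation between the two most recent tracking frames; the `TrackingFrame` type, its fields and the `interpolate` function are hypothetical names chosen for this example and are not taken from VSeeFace, whose actual interpolation method is not described here.

```python
from dataclasses import dataclass


@dataclass
class TrackingFrame:
    """Hypothetical tracking sample; not VSeeFace's actual data format."""
    timestamp: float   # capture time in seconds
    head_yaw: float    # head rotation around the vertical axis, in degrees
    mouth_open: float  # mouth openness from 0.0 (closed) to 1.0 (open)


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def interpolate(prev: TrackingFrame, nxt: TrackingFrame,
                render_time: float) -> TrackingFrame:
    """Estimate the pose at render_time from two surrounding tracking frames."""
    span = nxt.timestamp - prev.timestamp
    if span <= 0:
        # Degenerate case: identical or out-of-order timestamps.
        return nxt
    # Clamp so that times outside the two frames do not extrapolate.
    t = min(max((render_time - prev.timestamp) / span, 0.0), 1.0)
    return TrackingFrame(
        timestamp=render_time,
        head_yaw=lerp(prev.head_yaw, nxt.head_yaw, t),
        mouth_open=lerp(prev.mouth_open, nxt.mouth_open, t),
    )


# Two tracking frames 0.1 s apart (10 frames per second).
frame_a = TrackingFrame(timestamp=0.0, head_yaw=0.0, mouth_open=0.0)
frame_b = TrackingFrame(timestamp=0.1, head_yaw=10.0, mouth_open=1.0)

# A renderer running faster than the tracker can ask for in-between poses.
midpoint = interpolate(frame_a, frame_b, render_time=0.05)
```

With tracking at 10 frames per second and rendering at 60, each tracking interval would be filled with several in-between poses like `midpoint`, so the avatar moves continuously instead of jumping between samples.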


If you wish to access the settings file or any of the log files produced by VSeeFace, starting with version 1. To make use of these parameters, the avatar has to be specifically set up for it. If you can see your face being tracked by the run. If both sending and receiving are enabled, sending will be done after received data has been applied. Starting with Wine 6, you can try just using it normally. You can also edit your model in Unity. With ARKit tracking, I am animating eye movements only through eye bones and using the look blendshapes only to adjust the face around the eyes. Should the tracking still not work, one possible workaround is to capture the actual webcam using OBS and then re-export it as a camera using OBS-VirtualCam. Once enabled, it should start applying the motion tracking data from the Neuron to the avatar in VSeeFace. The most important information can be found by reading through the help screen as well as the usage notes inside the program. It is offered without any kind of warranty, so use it at your own risk. While there are free tiers for Live2D integration licenses, adding Live2D support to VSeeFace would only make sense if people could load their own models.


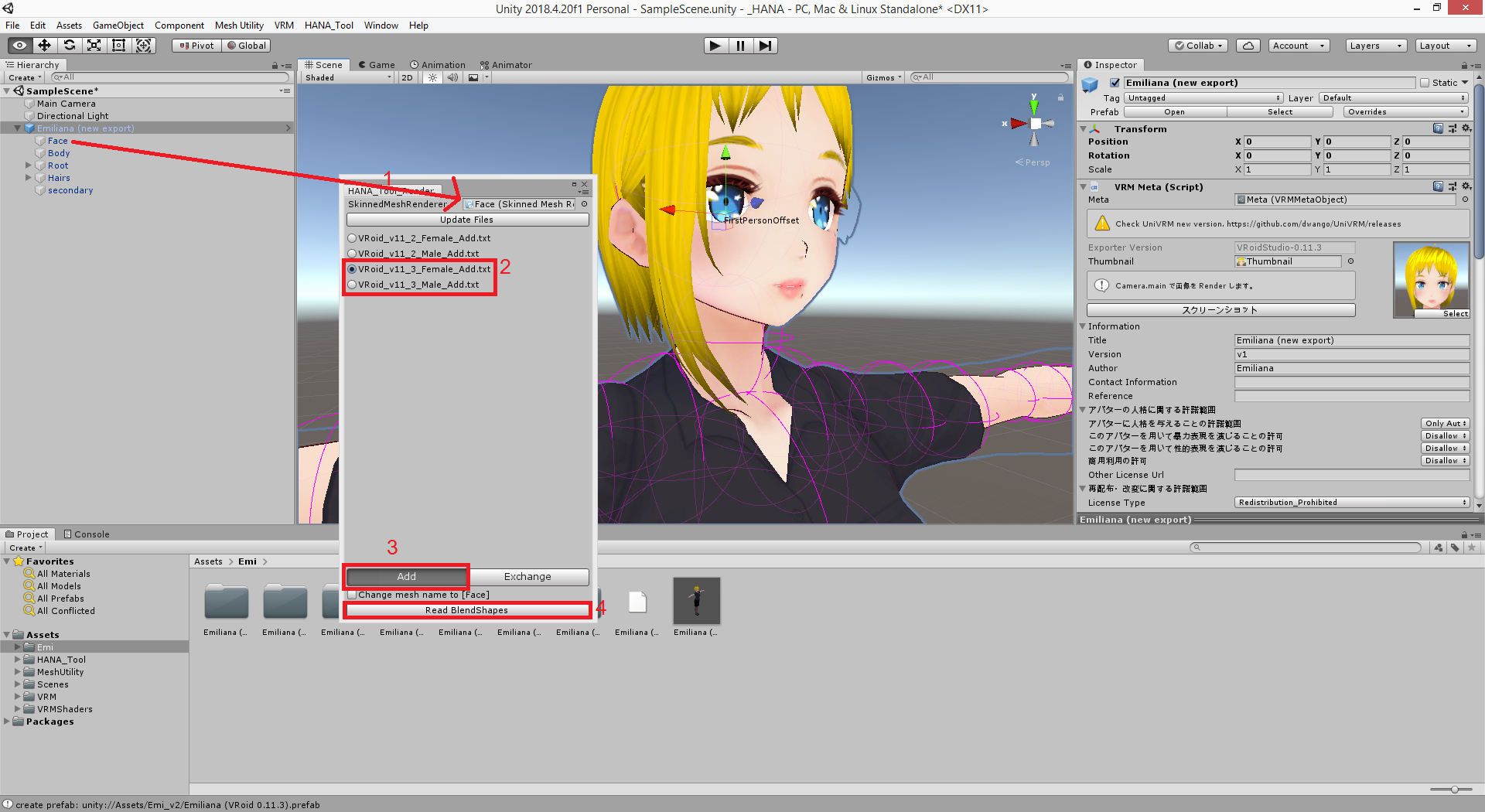

You can find an example avatar containing the necessary blendshapes here. You might have to scroll a bit to find it. After loading the project in Unity, load the provided scene inside the Scenes folder. When installing a different version of UniVRM, make sure to first completely remove all folders of the version already in the project. Before running it, make sure that no other program, including VSeeFace, is using the camera. Press the start button. Running this file will first ask for some information to set up the camera and then run the tracker process that is usually run in the background of VSeeFace. You can either import the model into Unity with UniVRM and adjust the colliders there (see here for more details) or use this application to adjust them.
