Stable diffusion huggingface

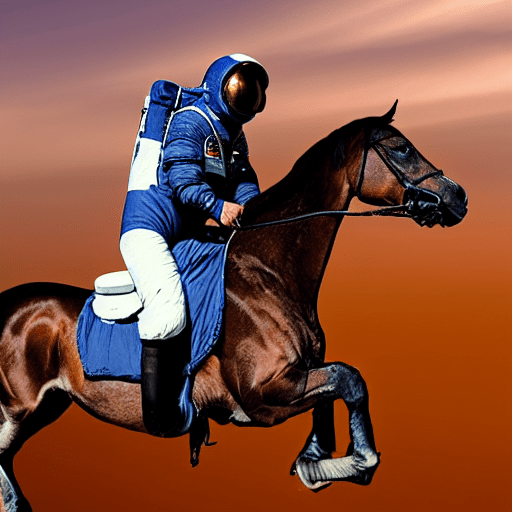

This model card focuses on the model associated with Stable Diffusion v2. This stable-diffusion-2 model is resumed from the stable-diffusion base checkpoint (base-ema) and was then resumed for further training steps. Model Description: This is a model that can be used to generate and modify images based on text prompts.

The Diffusers library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions. For more details about installing PyTorch and Flax, please refer to their official documentation. You can also dig into the models and schedulers toolbox to build your own diffusion system. Check out the Quickstart to launch your diffusion journey today! If you want to contribute to this library, please check out the Contribution guide, and look through the open issues for ones you'd like to tackle.

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference.

To begin with, it is most important to speed up Stable Diffusion as much as possible so that you can generate as many pictures as possible in a given amount of time. We aim to generate a beautiful photograph of an old warrior chief and will later try to find the best prompt for such a photograph. See the documentation on reproducibility for more information.

A default run uses full float32 precision and the default number of inference steps. The easiest speed-ups come from switching to float16 (half precision) and simply running fewer inference steps. We strongly suggest always running your pipelines in float16, as so far we have very rarely seen degradations in quality because of it. The number of inference steps is associated with the denoising scheduler we use.
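The two speed-ups described above, float16 weights and fewer inference steps, can be sketched roughly as follows. This is a minimal sketch, not the notebook's own code: the model id `stabilityai/stable-diffusion-2`, the step count of 20, the seed, and the helper names `load_fp16_pipeline` and `generate` are illustrative assumptions, and running it requires the `torch` and `diffusers` packages plus a CUDA GPU.

```python
def load_fp16_pipeline(model_id="stabilityai/stable-diffusion-2", device="cuda"):
    """Load a Stable Diffusion pipeline with float16 (half-precision) weights.

    The model id and device are assumptions chosen for illustration.
    """
    # Imported lazily so the helpers can be defined without the heavy
    # dependencies being installed.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # expect and keep the weights in half precision
    )
    return pipe.to(device)


def generate(pipe, prompt, num_inference_steps=20, seed=0):
    """Generate one image with a reduced step count and a fixed seed.

    20 steps is an illustrative value, not a recommendation from the source.
    """
    import torch

    # A seeded generator makes repeated runs comparable.
    generator = torch.Generator(device=pipe.device).manual_seed(seed)
    result = pipe(
        prompt,
        num_inference_steps=num_inference_steps,
        generator=generator,
    )
    return result.images[0]


# Example usage (downloads the weights and needs a GPU):
# pipe = load_fp16_pipeline()
# image = generate(pipe, "a photograph of an old warrior chief")
# image.save("warrior_chief.png")
```

How few steps still give acceptable images depends on the scheduler, so it is worth comparing a few step counts with the same seed.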

The model is intended for research purposes, including research on generative models.

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. Separate weights are available for loading into the CompVis Stable Diffusion codebase. Resources for more information: the GitHub repository and the paper. If GPU memory is limited, you can tell diffusers to expect the weights to be in float16 precision.

This repository contains Stable Diffusion models trained from scratch and will be continuously updated with new checkpoints. The following overview covers the currently available models, with more coming soon. A new Stable Diffusion 2 model has been released with the same number of parameters in the U-Net as the version 1 models; it is finetuned from SD 2. A x4 upscaling latent text-guided diffusion model has also been added.

Excluded uses are described below. While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

By far most of the memory is taken up by the cross-attention layers.

The inpainting model follows the mask-generation strategy presented in LAMA, which, in combination with the latent VAE representations of the masked image, is used as additional conditioning.

Evaluations were run with different classifier-free guidance scales.

The autoencoding part of the model is lossy. The model was trained on the large-scale dataset LAION-5B, which contains adult material, and is not fit for product use without additional safety mechanisms and considerations. The intended use of this model is with the Safety Checker in Diffusers. The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept. The concepts are intentionally hidden to reduce the likelihood of reverse-engineering this filter.
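The comparison performed by the Safety Checker can be pictured with a small toy model. The sketch below is an illustration only and not the Diffusers implementation: it assumes that the image and each concept are represented as embedding vectors, that the comparison is a cosine similarity, and that the hand-engineered weight acts as a per-concept threshold. The names `cosine_similarity`, `flag_image`, and the example concept names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def flag_image(image_embedding, concept_embeddings, concept_thresholds):
    """Return the names of concepts whose similarity exceeds their threshold.

    Assumption: each concept has its own hand-tuned threshold; the real
    filter keeps its concepts hidden, so these inputs are placeholders.
    """
    flagged = []
    for name, concept in concept_embeddings.items():
        if cosine_similarity(image_embedding, concept) > concept_thresholds[name]:
            flagged.append(name)
    return flagged


# Toy two-dimensional embeddings, purely for illustration.
concepts = {"concept_a": [1.0, 0.0], "concept_b": [0.0, 1.0]}
thresholds = {"concept_a": 0.5, "concept_b": 0.5}
print(flag_image([1.0, 0.0], concepts, thresholds))  # ['concept_a']
```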

Why does the size of the latent space matter? The smaller the latent space, the faster you can run inference and the cheaper the training becomes. How small is the latent space?
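To put a number on that question, the arithmetic can be sketched as below. The sketch assumes the commonly documented Stable Diffusion configuration, which the text above does not state: a spatial downsampling factor of 8 and 4 latent channels. The function names are hypothetical helpers.

```python
def latent_shape(height, width, downsample_factor=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for an image of the given size.

    The defaults (factor 8, 4 channels) are assumptions about the autoencoder.
    """
    if height % downsample_factor or width % downsample_factor:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (latent_channels, height // downsample_factor, width // downsample_factor)


def compression_ratio(height, width, image_channels=3, **latent_kwargs):
    """How many times fewer values the latent holds than the RGB image."""
    channels, latent_h, latent_w = latent_shape(height, width, **latent_kwargs)
    return (image_channels * height * width) / (channels * latent_h * latent_w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions a 512 by 512 RGB image is represented by 48 times fewer values in latent space, which is why the denoising steps are far cheaper there than in pixel space.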

The ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts. Intended research areas include probing and understanding the limitations and biases of generative models.

More specifically, the stable-diffusion-v checkpoint is randomly initialized and has been trained on laion2B-en. It is not optimized for FID scores.

The community has found some nice tricks to improve the memory constraints further. Instead of running the attention operation in batch, one can run it sequentially to save a significant amount of memory.

We also want to thank heejkoo for the very helpful overview of papers, code and resources on diffusion models, as well as crowsonkb and rromb for useful discussions and insights.
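Running attention sequentially rather than in one batch is exposed in Diffusers as attention slicing. The sketch below is a thin wrapper around the pipeline method `enable_attention_slicing`; the wrapper name `enable_sliced_attention` is a hypothetical helper, and whether slicing helps in practice depends on your hardware and library versions.

```python
def enable_sliced_attention(pipe, slice_size="auto"):
    """Turn on attention slicing for a Diffusers pipeline and return it.

    With slicing enabled, attention is computed in several smaller steps
    instead of all at once, trading some speed for lower peak memory.
    """
    pipe.enable_attention_slicing(slice_size)
    return pipe


# Example usage with an already loaded StableDiffusionPipeline:
# pipe = enable_sliced_attention(pipe)
# image = pipe("a photograph of an old warrior chief").images[0]
```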
