Spark read csv

DataFrames are distributed collections of data organized into named columns. Use `spark.read.csv()` to load CSV data into a DataFrame. In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a Spark DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using Scala.

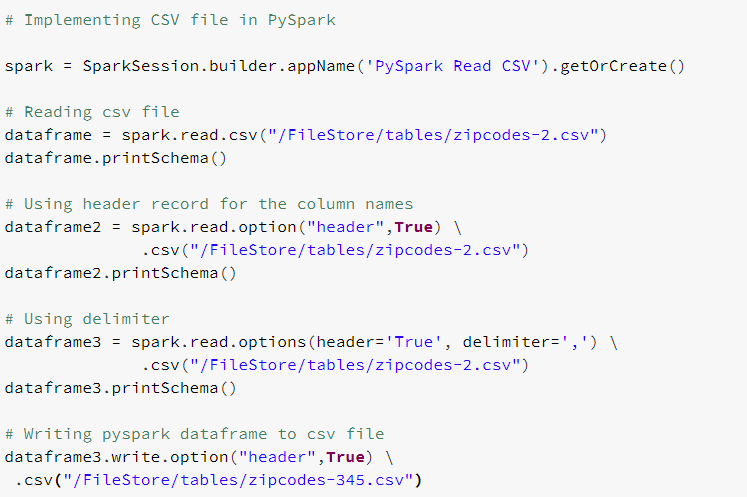

In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file, using PySpark examples. You can read a CSV file with either `csv("path")` or `format("csv").load("path")`. When you use the `format("csv")` method, you can also specify the data source by its fully qualified name, but for built-in sources you can simply use their short names (`csv`, `json`, `parquet`, `jdbc`, `text`, etc.). Refer to the zipcodes dataset. If your input file has a header with column names, you need to explicitly set the header option to True with `option("header", True)`; otherwise, the API treats the header as a data record. By default, PySpark reads all columns as strings (`StringType`). Later sections explain how to take the column names from the header record and use `inferSchema` to derive each column's type from the data.

Spark SQL provides `spark.read.csv()` to read a file or a directory of CSV files into a DataFrame, and `df.write.csv()` to write a DataFrame back to CSV. The `option()` function customizes reading or writing behavior, such as how the header, the delimiter character and the character set are handled. Other generic options are listed in Generic File Source Options. The `encoding` option decodes the CSV files with the given encoding type when reading, and sets the encoding (charset) of the saved CSV files when writing; CSV built-in functions ignore this option. The `quote` option sets a single character used for escaping quoted values where the separator can be part of the value. For reading, to turn off quotations, set it to an empty string rather than null; for writing, an empty string makes Spark use the null character (U+0000). The `quoteAll` flag indicates whether all values should always be enclosed in quotes; by default, only values containing a quote character are escaped.

Use the `nullValue` option to specify a string in the CSV that should be treated as null. Custom date formats for the `dateFormat` option follow the formats at Datetime Patterns.

When `inferSchema` is enabled, this function goes through the input once to determine the input schema. To avoid this extra pass over the data, disable the `inferSchema` option or specify the schema explicitly using `schema`. For the extra options, refer to Data Source Option for the Spark version you use.

Other available options include `quote`, `escape`, `nullValue`, `dateFormat` and `quoteAll`; additional generic options are listed in Generic File Source Options. Older Spark releases described `dateFormat` patterns in terms of `java.text.SimpleDateFormat`. The built-in CSV reader is available since Spark 2.0. You can also register the DataFrame as a temporary view and query it with SQL.

Because a DataFrame is distributed, Spark can process large datasets efficiently across a cluster of machines. These reader methods take a file path as an argument. If the `enforceSchema` option is set to false, the schema is validated against all headers in the CSV files when the `header` option is set to true. The `maxCharsPerColumn` option limits the number of characters allowed in any single value; by default it is -1, meaning unlimited length. To keep the raw text of corrupt records, the user-defined schema must contain a string field named by `columnNameOfCorruptRecord` (this overrides the `spark.…` setting); if the schema does not have that field, the corrupt record text is dropped during parsing. Spark parses only the columns a query requires, so which records are treated as corrupt can differ depending on the required set of fields.
