Pyspark groupby

GroupBy objects are returned by groupby calls on a DataFrame.

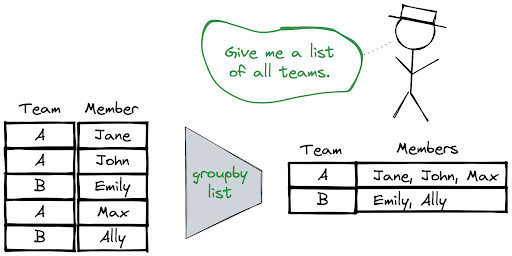

As a quick reminder, PySpark's groupBy is an operation for running aggregations on your data. It groups the rows of a DataFrame by one or more columns and then applies an aggregation function to each group. Common aggregation functions include sum, count, mean, min, and max. To compute several aggregates for the same groups at once, we can chain multiple aggregation functions. In some cases you may need a custom aggregation function, for example one that takes a pandas Series as input and returns the median value of that Series.


In PySpark, the DataFrame groupBy function groups data together based on specified columns so that aggregations can be run on the collected groups. For example, with a DataFrame containing website click data, we may wish to group together all the browser type values contained in a certain column and then determine an overall count for each browser type. This would allow us to determine the most popular browser type used in website requests.

Multiple columns can also be passed to a single aggregate function. The functions module is conventionally imported as F, and withColumn returns a new DataFrame by adding a column or replacing an existing column that has the same name. Together these allow us to groupBy date and sum multiple columns. Note that any later use of F depends on that import having been run first.

On performance: Spark is smart enough to select only the necessary columns, and the shuffle in a groupBy can be reduced if the data is already partitioned correctly, for example by bucketing.

In this example, since we only have one category, the output shows the median price for that category.


We can also run groupBy and aggregate on two or more DataFrame columns; for example, we can group by department and state and sum the salary and bonus columns. The same pattern works for the other aggregate functions. Using the agg function, we can calculate many aggregations at a time in a single statement with SQL functions such as sum, avg, min, max, and mean. To use these, import them from pyspark.sql.functions. For example, we can group on the department column, calculate the sum and avg of salary for each department, and calculate the sum and max of bonus for each department.

In this tutorial, you have learned how to use groupBy functions on a PySpark DataFrame, how to run them on multiple columns, and finally how to filter data on the aggregated columns.


A PySpark groupBy on multiple columns can be performed either by passing a list of the DataFrame column names you want to group by or by passing the column names as separate parameters to the groupBy method. In this article, I will explain how to perform groupBy on multiple columns, including through PySpark SQL, and how to use the sum, min, max, and avg functions. Passing two or more columns to the groupBy method returns a pyspark.sql.GroupedData object, which provides agg, sum, count, min, max, avg, etc. When you group by multiple columns, rows that share the same combination of key values are shuffled and brought together. Because this involves shuffling data across the network, groupBy is considered a wide transformation; it is an expensive operation, and you should avoid it when you can.


In this article, we've covered the fundamental concepts and usage of groupBy in PySpark, including syntax, aggregation functions, multiple aggregations, filtering, window functions, and performance optimization.

Spark groupByKey and reduceByKey are transformation operations on key-value RDDs, but they differ in how they combine the values corresponding to each key.

Once grouped, you can perform various aggregation operations on the grouped data, such as summing, counting, averaging, or applying custom aggregation functions. With PySpark's groupBy, you can tackle complex data analysis tasks and derive insights from your data.
