Matlab pca

The rows of coeff contain the coefficients for the four ingredient variables, and its columns correspond to four principal components.

Data matrix X has 13 continuous variables starting at column 3: wheel-base, length, width, height, curb-weight, engine-size, bore, stroke, compression-ratio, horsepower, peak-rpm, city-mpg, and highway-mpg. The variables bore and stroke are missing four values in rows 56 to 59, and the variables horsepower and peak-rpm are missing two values. By default, pca performs the action specified by the 'Rows','complete' name-value pair argument, which removes observations containing NaN values before the calculation.
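The default handling of missing values can be checked on a small example. The following is a minimal sketch that uses synthetic data (an assumption for illustration, not the automobile data described above) and requires Statistics and Machine Learning Toolbox for pca.

```matlab
% Synthetic data: an assumption for illustration only.
rng(0);                          % fix the seed so the run is repeatable
X = randn(20,4);                 % 20 observations, 4 variables
X(3,2) = NaN;                    % inject two missing values
X(7,4) = NaN;

% The default is equivalent to 'Rows','complete':
% rows that contain NaN are dropped before the decomposition.
coeffDefault  = pca(X);
coeffComplete = pca(X,'Rows','complete');

% Dropping the incomplete rows by hand gives the same coefficients.
Xclean     = X(~any(isnan(X),2),:);
coeffClean = pca(Xclean);

% 'Rows','all' expects no missing values and raises an error instead.
threwError = false;
try
    pca(X,'Rows','all');
catch
    threwError = true;
end
```

With 'Rows','complete', the rows of the score output that correspond to incomplete observations are returned as NaN, so the number of rows still matches X.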

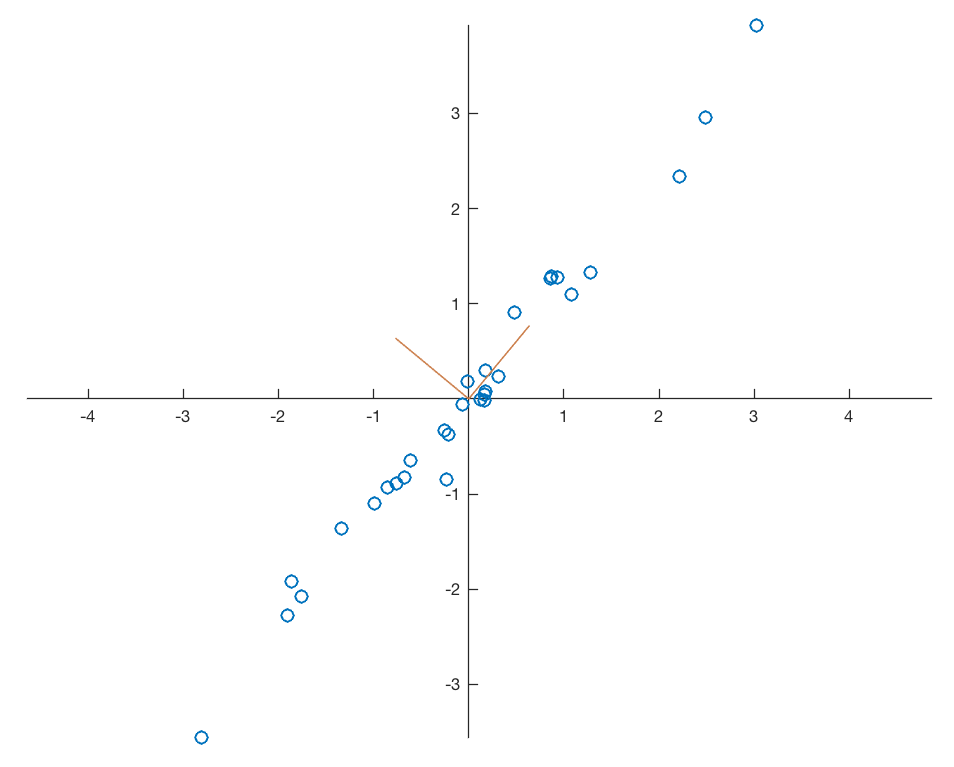

Principal Component Analysis (PCA) is often used as a data mining technique to reduce the dimensionality of data. It assumes that the directions in which the data varies most carry the most important information. PCA finds a unit vector (the first principal component) that minimizes the average squared distance from the points to the line it defines, which is equivalent to maximizing the variance of the projected data. The other components are lines perpendicular to this one. Working with a large number of features is computationally expensive, and the data generally has a small intrinsic dimension. Reducing the dimension with PCA does not guarantee that no information is lost; instead, it keeps the components with the highest variance, so that as little information as possible is discarded.
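How much variance a reduced representation retains can be read from the explained output of pca. The sketch below assumes the hald sample data set that ships with Statistics and Machine Learning Toolbox; the 95 percent threshold is an arbitrary choice for illustration.

```matlab
load hald                                  % defines 'ingredients' (13-by-4)

% 'latent' holds the component variances, 'explained' the percent of
% total variance carried by each component.
[coeff,score,latent,~,explained] = pca(ingredients);

cumExplained = cumsum(explained);          % cumulative percent of variance
k = find(cumExplained >= 95, 1);           % smallest k reaching the threshold

% Reduced representation: keep only the first k score columns.
Xreduced = score(:,1:k);
```

The components dropped this way are the ones with the smallest variance, which is the sense in which PCA limits, rather than eliminates, the loss of information.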


One of the difficulties inherent in multivariate statistics is the problem of visualizing data that has many variables. The function plot displays a graph of the relationship between two variables. The plot3 and surf commands display different three-dimensional views. But when there are more than three variables, it is more difficult to visualize their relationships. Fortunately, in data sets with many variables, groups of variables often move together. One reason for this is that more than one variable might be measuring the same driving principle governing the behavior of the system. In many systems there are only a few such driving forces. But an abundance of instrumentation enables you to measure dozens of system variables. When this happens, you can take advantage of this redundancy of information. You can simplify the problem by replacing a group of variables with a single new variable. Principal component analysis is a quantitatively rigorous method for achieving this simplification. The method generates a new set of variables, called principal components. Each principal component is a linear combination of the original variables.
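The statement that each principal component is a linear combination of the original variables can be verified directly. This is a minimal sketch, assuming the hald sample data set; the subtraction of a row vector from a matrix relies on implicit expansion, which requires R2016b or later.

```matlab
load hald                                   % defines 'ingredients' (13-by-4)

% The sixth output, mu, is the estimated mean of each variable.
[coeff,score,~,~,~,mu] = pca(ingredients);

centered    = ingredients - mu;             % center each column
manualScore = centered * coeff;             % one linear combination per column of coeff

% The first principal component alone uses the first column of coeff.
firstPC = centered * coeff(:,1);

% Largest difference between the manual result and the pca scores.
reconErr = max(abs(manualScore(:) - score(:)));
```

Each column of coeff holds the weights of one linear combination, and the columns are orthonormal.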


The pca function returns the principal component coefficients for the data matrix X. Rows of X correspond to observations and columns correspond to variables. For p variables, the coefficient matrix is p-by-p. Each column of coeff contains coefficients for one principal component, and the columns are in descending order of component variance. By default, pca centers the data and uses the singular value decomposition (SVD) algorithm. Additional options are specified as name-value pair arguments; for example, you can specify the number of principal components pca returns or an algorithm other than SVD to use. These options can be combined with any of the other input arguments.
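The calls below sketch the basic syntax and two of the name-value options mentioned above. The hald sample data set is an assumption chosen for illustration; 'NumComponents' and 'Algorithm' are documented pca options.

```matlab
load hald                                   % defines 'ingredients' (13-by-4)

% Basic call: coefficients, scores, and component variances.
[coeff,score,latent] = pca(ingredients);    % coeff is p-by-p, here 4-by-4

% Return only the first two principal components.
[coeff2,score2] = pca(ingredients,'NumComponents',2);

% Use the eigenvalue decomposition of the covariance matrix instead of SVD.
coeffEig = pca(ingredients,'Algorithm','eig');

% The variance of each score column matches the corresponding entry of latent.
scoreVar = var(score)';
```

The two algorithms should agree on the coefficients up to numerical precision; the comparison in practice is best made on absolute values, in case a column sign differs.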

This is a demonstration of how one can use PCA to classify a 2D data set. This is the simplest form of PCA, but you can easily extend it to higher dimensions, and you can do image classification with PCA. PCA consists of a number of steps:

- Loading the data
- Subtracting the mean of the data from the original dataset
- Finding the covariance matrix of the dataset
- Finding the eigenvector(s) associated with the greatest eigenvalue(s)
- Projecting the original dataset on the eigenvector(s)

Siamak Faridani
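The steps listed above can be sketched with base MATLAB functions only. This is not the File Exchange author's code; it is a minimal illustration on synthetic 2D data (an assumption), and the centering step relies on implicit expansion (R2016b or later).

```matlab
rng(1);                                    % repeatable synthetic data

% Step 1: load the data (here: a synthetic, correlated 2-D sample).
data = randn(200,2) * [2 0; 1.2 0.5];

% Step 2: subtract the mean of each column.
mu       = mean(data,1);
centered = data - mu;

% Step 3: covariance matrix of the data set.
C = cov(centered);

% Step 4: eigenvectors, sorted by descending eigenvalue.
[V,D] = eig(C);
[eigvals,order] = sort(diag(D),'descend');
V = V(:,order);

% Step 5: project the centered data on the leading eigenvector.
projected = centered * V(:,1);
```

The variance of the projected data equals the largest eigenvalue, which is the property that makes the leading eigenvector the first principal component.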


The ppca function returns the principal component coefficients for the data matrix Y, based on probabilistic principal component analysis. It also returns the principal component scores, which are the representations of Y in the principal component space, and the principal component variances, which are the eigenvalues of the covariance matrix of Y, in pcvar. Each column of coeff contains coefficients for one principal component, and the columns are in descending order of component variance. Rows of score correspond to observations, and columns correspond to components. Rows of Y correspond to observations and columns correspond to variables. Probabilistic principal component analysis might be preferable to other algorithms that handle missing data, such as the alternating least squares algorithm, when any data vector has one or more missing values. It assumes that the values are missing at random through the data set. An expectation-maximization algorithm is used for both complete and missing data. Additional options are specified as name-value pair arguments; for example, you can introduce initial values for the residual variance, v, or change the termination criteria. These options can be combined with any of the other input arguments.
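A call to ppca on data with missing values might look like the sketch below. The hald data set, the positions of the injected NaN values, the choice of two components, and the MaxIter value are all assumptions for illustration.

```matlab
load hald                                  % defines 'ingredients' (13-by-4)
rng(0);                                    % the default starting values are random

Y = ingredients;
Y(2,3) = NaN;                              % hypothetical missing entries
Y(9,1) = NaN;

K = 2;                                     % number of components to return

% Coefficients, scores, and component variances from probabilistic PCA.
[coeff,score,pcvar] = ppca(Y,K);

% Changing a termination criterion through the 'Options' argument.
opt = statset('MaxIter',2000);
[coeffOpt,scoreOpt,pcvarOpt] = ppca(Y,K,'Options',opt);
```

Because the expectation-maximization iteration starts from random values unless initial values are supplied, fixing the random seed keeps the run repeatable.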


Why do we need PCA? Written on May 25, by Vivek Maskara.

The principal components as a whole form an orthogonal basis for the space of the data. The data shows the largest variability along the first principal component axis: projecting each observation on that axis gives a new variable, and the variance of this variable is the maximum among all possible choices of the first axis.

Note: In the plots for features f1 to f5, you can notice that the different features have different degrees of variance.

If the raw data contains NaN values while the 'Rows' option is set to 'all', pca terminates with the error "Raw data contains NaN missing value while 'Rows' option is set to 'all'."

When the variable weights are the inverse of the sample variance and you also assign weights to observations using 'Weights', the variable weights become the inverse of the weighted sample variance.

Because pca supports code generation, you can generate code that performs PCA using a training data set and applies the PCA to a test data set.
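Applying a PCA fitted on training data to a test set amounts to reusing the training mean and coefficients. The following is a minimal sketch on synthetic data; the 80/20 split and the 95 percent variance threshold are assumptions for illustration, and the centering relies on implicit expansion (R2016b or later).

```matlab
rng(2);                                    % repeatable synthetic data
X = randn(100,5) * randn(5,5);             % 100 observations, 5 correlated variables

XTrain = X(1:80,:);
XTest  = X(81:end,:);

% Fit PCA on the training data only; mu is the training mean.
[coeff,scoreTrain,~,~,explained,mu] = pca(XTrain);

% Keep the smallest number of components that explains at least 95 percent.
idx = find(cumsum(explained) >= 95, 1);
scoreTrain95 = scoreTrain(:,1:idx);

% Transform the test data with the training mean and coefficients.
scoreTest95 = (XTest - mu) * coeff(:,1:idx);
```

The test data must be centered with the training mean, not its own mean, so that both sets are expressed in the same coordinate system.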


The results of pca with the 'Rows','complete' name-value pair argument when there is no missing data and with the 'Algorithm','als' name-value pair argument when there is missing data are close to each other. In a contrasting case, the angle between the two spaces is substantially larger, which indicates that the results differ. Unlike in optimization settings, reaching the MaxIter value is regarded as convergence.

To test the trained model using the test data set, you need to apply the PCA transformation obtained from the training data to the test data set.

The variables for the cities are climate, housing, health, crime, transportation, education, arts, recreation, and economics. The second component distinguishes among cities that have high values for the first set of variables and low for the second, and cities that have the opposite. By clicking an observation point in the plot, you can read the observation name and scores for each principal component. Note the outlying points in the right half of the plot.

Finally, select a subset of the eigenvectors as the basis vectors and project the z-score of the data on the basis vectors.

Perform the principal component analysis using the inverse of variances of the ingredients as variable weights.
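Using the inverse of the variable variances as weights can be sketched as follows, assuming the hald sample data set. With variable weights the returned coefficients are not orthonormal, so the sketch also rescales them by the standard deviations to recover an orthonormal matrix.

```matlab
load hald                                  % defines 'ingredients' (13-by-4)

% 'VariableWeights','variance' weights each variable by 1/variance.
[wcoeff,~,latent,~,explained] = pca(ingredients,'VariableWeights','variance');

% Rescale the weighted coefficients to obtain orthonormal ones.
coefforth = diag(std(ingredients)) \ wcoeff;

% Same component variances as running pca on the z-scored data.
[~,~,latentZ] = pca(zscore(ingredients));
```

Weighting by inverse variance is equivalent to standardizing the variables first, which is why the component variances match those obtained from the z-scores and sum to the number of variables.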
