Jobs databricks


To learn about configuration options for jobs and how to edit your existing jobs, see Configure settings for Azure Databricks jobs. To learn how to manage and monitor job runs, see View and manage job runs. To create your first workflow with an Azure Databricks job, see the quickstart. The Tasks tab appears with the create task dialog along with the Job details side panel containing job-level settings. In the Type drop-down menu, select the type of task to run. See Task type options.


If total cell output exceeds 20MB in size, or if the output of an individual cell is larger than 8MB, the run is canceled and marked as failed.
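To make the two output limits concrete (20MB total cell output, 8MB for any single cell), here is a minimal sketch that predicts whether a run with given per-cell output sizes would be canceled. The function name is hypothetical. The sketch assumes "MB" means 1024 * 1024 bytes, because the text does not say whether it means 10^6 or 2^20 bytes. Following the wording "exceeds" and "larger than", output exactly at a limit is treated as allowed.

```python
# Minimal sketch (hypothetical helper, not a Databricks API): check per-cell
# output sizes against the limits stated above.
# Assumption: 1 MB = 1024 * 1024 bytes; the text does not define the unit.
MB = 1024 * 1024
TOTAL_LIMIT_BYTES = 20 * MB  # limit on total cell output
CELL_LIMIT_BYTES = 8 * MB    # limit on any individual cell's output


def exceeds_output_limits(cell_output_sizes: list[int]) -> bool:
    """Return True if a run with these per-cell output sizes (in bytes)
    would be canceled and marked as failed under the stated limits."""
    total = sum(cell_output_sizes)
    largest = max(cell_output_sizes, default=0)
    # "exceeds" / "larger than": strictly greater than the limit fails.
    return total > TOTAL_LIMIT_BYTES or largest > CELL_LIMIT_BYTES


if __name__ == "__main__":
    # Three 5 MB cells: each under 8 MB, 15 MB total under 20 MB.
    print(exceeds_output_limits([5 * MB, 5 * MB, 5 * MB]))  # False
    # One 9 MB cell exceeds the per-cell limit.
    print(exceeds_output_limits([9 * MB]))  # True
    # Three 7 MB cells: each under 8 MB, but 21 MB total exceeds 20 MB.
    print(exceeds_output_limits([7 * MB, 7 * MB, 7 * MB]))  # True
```

The example checks the two limits independently. A notebook can fail on total size even when every individual cell stays under 8MB.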


A workspace is limited to concurrent task runs.


Last updated: May 10th, by Adam Pavlacka. Last updated: April 17th, by Adam Pavlacka. Job clusters have a maximum notebook output size of 20 MB.

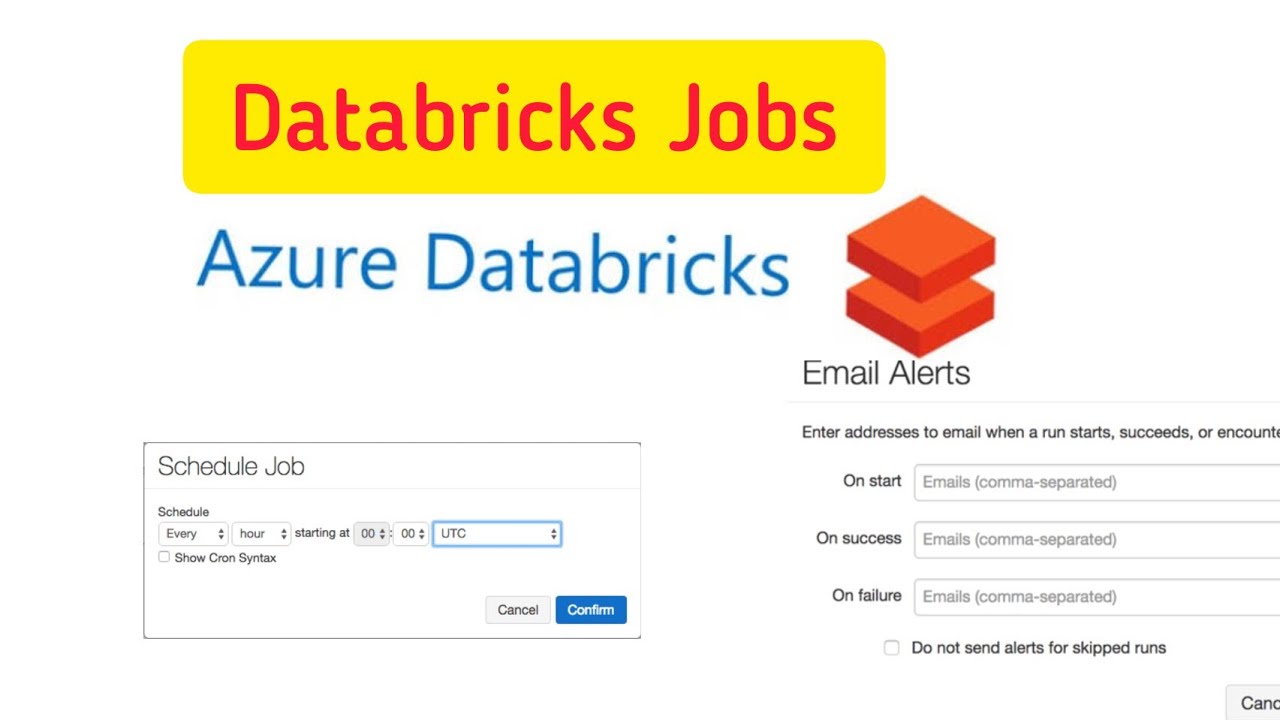


See Use a notebook from a remote Git repository. See Configuring JAR job parameters. A warning is shown in the UI if you attempt to add a task parameter with the same key as a job parameter.

JAR: Specify the Main class.

Databricks does not support jobs that have circular dependencies or that nest more than three Run Job tasks, and might not allow running such jobs in future releases. When capacity is available, a queued job run is dequeued and run.

By default, jobs run as the identity of the job owner. If your job runs SQL queries using the SQL task, the identity used to run the queries is determined by the sharing settings of each query, even if the job runs as a service principal. Failure notifications are sent on the initial task failure and on any subsequent retries.

You can use a schedule to automatically run your Azure Databricks job at specified times and periods.

Configure the cluster where the task runs. Shared access mode is not supported.
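The settings described above (a schedule, run queueing, the run-as identity, failure notifications, and a JAR task's Main class) can all be expressed in one job definition. The following is a hedged sketch of such a definition as a Python dict, in the shape accepted by the Databricks Jobs API 2.1 `jobs/create` endpoint. Every concrete value is a hypothetical placeholder: the job name, class name, JAR path, cluster ID, service principal ID, email address, and cron expression. Verify the field names against the Jobs API reference for your workspace before relying on them.

```python
import json

# Hedged sketch of a job settings payload (Jobs API 2.1 shape).
# All concrete values below are hypothetical placeholders.
job_settings = {
    "name": "example-nightly-jar-job",  # hypothetical job name
    # Run every day at 02:00 UTC. Quartz cron fields:
    # seconds minutes hours day-of-month month day-of-week.
    "schedule": {
        "quartz_cron_expression": "0 0 2 * * ?",
        "timezone_id": "UTC",
        "pause_status": "UNPAUSED",
    },
    # Enable run queueing: a queued run is dequeued and run
    # when capacity is available.
    "queue": {"enabled": True},
    # Run as a service principal instead of the job owner (the default).
    # Hypothetical application ID.
    "run_as": {"service_principal_name": "00000000-0000-0000-0000-000000000000"},
    # Failure notifications go out on the initial failure and on retries.
    "email_notifications": {"on_failure": ["oncall@example.com"]},
    "tasks": [
        {
            "task_key": "main_jar",
            "existing_cluster_id": "0000-000000-example0",  # hypothetical
            "spark_jar_task": {
                # A JAR task must specify the Main class.
                "main_class_name": "com.example.Main",  # hypothetical
                "parameters": ["--date", "2024-01-01"],
            },
            # Hypothetical location of the JAR that contains the Main class.
            "libraries": [{"jar": "/Volumes/main/default/jars/example.jar"}],
        }
    ],
}

# Serialize to the JSON body you would send to the create-job endpoint.
print(json.dumps(job_settings, indent=2))
```

Keeping the definition as plain data makes it easy to review and version-control. The same dict can be sent with any HTTP client or mapped onto an SDK call.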


Run Job: In the Job drop-down menu, select a job to be run by the task. See Configure a retry policy for a task. See Re-run failed and skipped tasks.

Spark-submit does not support cluster autoscaling. If you are using a Unity Catalog-enabled cluster, spark-submit is supported only if the cluster uses the assigned access mode. You can also configure a cluster for each task when you create or edit a task. To learn more about autoscaling, see Cluster autoscaling.

Additionally, individual cell output is subject to an 8MB size limit. To see an example of reading positional arguments in a Python script, see Step 2: Create a script to fetch GitHub data.
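Because a Run Job task triggers another job, a set of jobs can form chains or loops. Databricks does not support jobs with circular dependencies or that nest more than three Run Job tasks. The minimal sketch below checks a hypothetical in-memory map from each job to the jobs its Run Job tasks trigger. It is not a Databricks API, and the function names are illustrative. It assumes "nesting depth" means the longest chain of Run Job edges below the root job, so a job that runs a job that runs a job has depth 2.

```python
from typing import Dict, List

# From the text: no more than three nested Run Job tasks.
MAX_RUN_JOB_NESTING = 3


def has_cycle(graph: Dict[str, List[str]], root: str) -> bool:
    """Detect a circular dependency reachable from root via depth-first search."""
    on_path: set = set()  # jobs on the current DFS path
    done: set = set()     # jobs fully explored and known to be cycle-free

    def dfs(job: str) -> bool:
        if job in on_path:
            return True  # revisiting a job on the current path means a loop
        if job in done:
            return False
        on_path.add(job)
        if any(dfs(child) for child in graph.get(job, [])):
            return True
        on_path.remove(job)
        done.add(job)
        return False

    return dfs(root)


def nesting_depth(graph: Dict[str, List[str]], job: str) -> int:
    """Longest chain of Run Job edges below job. Assumes the graph is acyclic."""
    children = graph.get(job, [])
    if not children:
        return 0
    return 1 + max(nesting_depth(graph, child) for child in children)


def check_run_job_graph(graph: Dict[str, List[str]], root: str) -> str:
    """Return "cycle", "too_deep", or "ok" for the jobs reachable from root."""
    if has_cycle(graph, root):
        return "cycle"
    if nesting_depth(graph, root) > MAX_RUN_JOB_NESTING:
        return "too_deep"
    return "ok"


if __name__ == "__main__":
    print(check_run_job_graph({"a": ["b"], "b": ["a"]}, "a"))  # cycle
    print(check_run_job_graph({"a": ["b"], "b": ["c"], "c": ["d"]}, "a"))  # ok
    print(check_run_job_graph({"a": ["b"], "b": ["c"], "c": ["d"], "d": ["e"]}, "a"))  # too_deep
```

The cycle check runs first because the depth calculation would recurse forever on a loop.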
