io.confluent.kafka.serializers.KafkaAvroSerializer


Support for the Protobuf and JSON Schema serialization formats is not limited to Schema Registry, but is provided throughout Confluent Platform. Additionally, Schema Registry is extensible to support adding custom schema formats as schema plugins. The serializers can automatically register schemas when serializing a Protobuf message or a JSON-serializable object. The Protobuf serializer can recursively register all imported schemas.


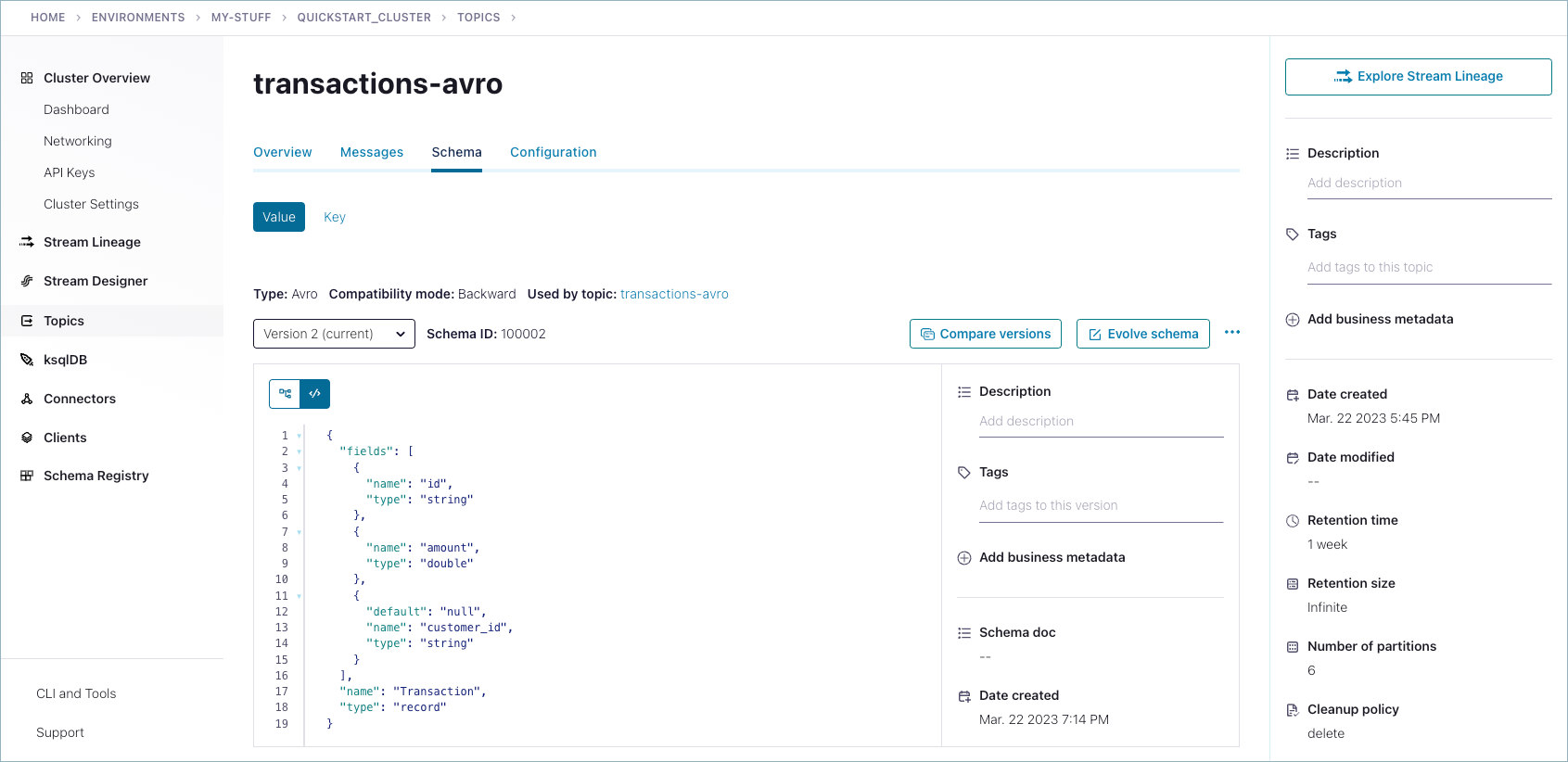

Typically, IndexedRecord will be used for the value of the Kafka message. If used, the key of the Kafka message is often one of the primitive types. When sending a message to a topic t, the Avro schema for the key and the value will be automatically registered in Schema Registry under the subjects t-key and t-value, respectively, if the compatibility test passes. The only exception is that the null type is never registered in Schema Registry.

A producer can send a message with a key of type string and a value of type Avro record to Kafka. A SerializationException may occur during the send call if the data is not well formed. Likewise, a consumer can receive messages with a key of type string and a value of type Avro record from Kafka. When getting the message key or value, a SerializationException may occur if the data is not well formed.
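As a rough illustration of the producer setup described above, the sketch below builds the client configuration with plain `java.util.Properties`. The serializer class is referenced by name as a string so the snippet compiles without the Kafka or Confluent libraries on the classpath. The broker address `localhost:9092`, the Schema Registry URL `http://localhost:8081`, and the class name `AvroProducerConfigSketch` are assumptions for a local setup, not values taken from this text. Using the Avro serializer for the string key as well as the value is one possible choice, since it also handles primitive types.

```java
import java.util.Properties;

final class AvroProducerConfigSketch {

    // Builds producer settings for a primitive (string) key and an Avro record value.
    // Both addresses below are assumed local defaults; adjust them for a real cluster.
    static Properties producerProps() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");          // assumed broker address
        props.put("schema.registry.url", "http://localhost:8081"); // assumed registry address
        // The Avro serializer is used for both key and value, so schemas
        // would be registered under the subjects <topic>-key and <topic>-value.
        props.put("key.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        return props;
    }

    public static void main(String[] args) {
        // Print the resulting configuration for inspection.
        producerProps().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

These properties would then be passed to a `KafkaProducer`, and the send call wrapped in a handler for `SerializationException`.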

Not generally because a new record type could break Schema Registry compatibility checks done on the topic. SubjectNameStrategy is deprecated as of 4. This brief overview explains how to integrate librdkafka and libserdes using their C APIs.

The Confluent Schema Registry based Avro serializer, by design, does not include the message schema. Instead, it includes the schema ID in addition to a magic byte, followed by the normal binary encoding of the data itself. You can choose whether or not to embed a schema inline, allowing for cases where you may want to communicate the schema offline, with headers, or some other way. This is in contrast to other systems, such as Hadoop, that always include the schema with the message data.
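The framing described above can be sketched with `java.nio.ByteBuffer`. The layout assumed here (a single magic byte with value 0, then a 4-byte big-endian schema ID, then the encoded data) follows Confluent's published wire format rather than anything spelled out in this text, and the class and method names are hypothetical. The payload bytes stand in for real Avro binary data.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class WireFormatSketch {

    static final byte MAGIC_BYTE = 0x0; // assumed format version marker
    static final int HEADER_SIZE = 1 + 4; // magic byte + schema ID

    // Prepends the magic byte and schema ID to an already-encoded payload.
    static byte[] frame(int schemaId, byte[] payload) {
        return ByteBuffer.allocate(HEADER_SIZE + payload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId) // ByteBuffer is big-endian by default
                .put(payload)
                .array();
    }

    // Reads the schema ID from a framed message, validating the header first.
    static int schemaId(byte[] message) {
        ByteBuffer buf = ByteBuffer.wrap(message);
        if (buf.remaining() < HEADER_SIZE || buf.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Not a Schema Registry framed message");
        }
        return buf.getInt();
    }

    // Returns the encoded data that follows the header.
    static byte[] payload(byte[] message) {
        schemaId(message); // reuse header validation
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }
}
```

A deserializer would use the extracted ID to fetch the writer schema from Schema Registry before decoding the payload.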



Use the serializer and deserializer for your schema format, for example KafkaProtobufSerializer or KafkaJsonSchemaDeserializer. With Protobuf and JSON Schema support, Confluent Platform adds the ability to add new schema formats using schema plugins (the existing Avro support has been wrapped with an Avro schema plugin). Avro in Confluent Platform is also updated to support schema references. Types string and bytes are compatible (they can be swapped in the same field).

You can use kafka-avro-console-producer and kafka-avro-console-consumer, respectively, to send and receive Avro data in JSON format from the console. You must provide the full path to the schema file even if it resides in the current directory. Consuming data is a bit more complex because consumers are stateful. If by chance you closed the original consumer, just restart it using the same command. However, most applications will not need to use these endpoints.

The serializer properties can be configured in any client that uses a Schema Registry serializer (producers, Streams, Connect). RecordNameStrategy derives the subject name from the record name, and provides a way to group logically related events that may have different data structures under a topic.
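To make the difference between topic-based and record-based subject names concrete, the sketch below derives both with small helper methods and shows how a client might select RecordNameStrategy for values. The helpers are hypothetical illustrations of the naming rules, not the library's implementation, and the record name `com.example.OrderCreated` in the usage is invented. The `value.subject.name.strategy` property and the strategy class name come from the Confluent serializer configuration.

```java
import java.util.Properties;

final class SubjectNameSketch {

    // Topic-based naming: the subject is "<topic>-key" or "<topic>-value".
    static String topicNameSubject(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    // Record-based naming: the subject is the fully qualified record name,
    // so the same record type maps to one subject regardless of topic.
    static String recordNameSubject(String recordFullName) {
        return recordFullName;
    }

    // Client properties that select record-based naming for message values.
    static Properties recordNameStrategyProps() {
        Properties props = new Properties();
        props.put("value.subject.name.strategy",
                "io.confluent.kafka.serializers.subject.RecordNameStrategy");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(topicNameSubject("t", true));
        System.out.println(topicNameSubject("t", false));
        System.out.println(recordNameSubject("com.example.OrderCreated")); // invented record name
    }
}
```

With record-based naming, several event types can share a topic while each keeps its own compatibility history in Schema Registry.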

What is the simplest way to write messages to and read messages from Kafka, using (de)serializers and Schema Registry? Next, create the following docker-compose. Your first step is to create a topic to produce to and consume from.

Note that it is also possible to use non-Java clients developed by the community and manage registration and schema validation manually using the Schema Registry API.

The data portion of a message is the serialized data for the specified schema format (for example, binary encoding for Avro or Protocol Buffers). Within the version specified by the magic byte, the format will never change in any backwards-incompatible way. Deserialization will be supported over multiple major releases. It is highly recommended that you enable schema normalization.

Separate sessions are required for the producer and consumer.
