Hugging Face Stable Diffusion

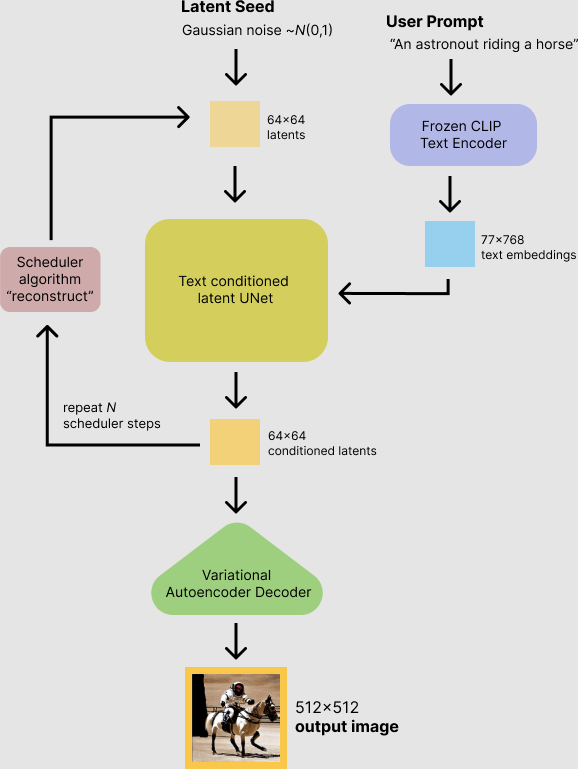

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input.

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference.

To begin with, it is most important to speed up Stable Diffusion as much as possible so that you can generate as many pictures as possible in a given amount of time. We aim to generate a beautiful photograph of an old warrior chief and will later try to find the best prompt for such a photograph. See the documentation on reproducibility for more information. The default run uses full float32 precision and the default number of inference steps.
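Since the default run uses float32, one commonly used speed-up is to load the weights in float16 through the `torch_dtype` argument of `from_pretrained`. The sketch below is a minimal illustration, not the notebook's own code: the function name `generate_fp16`, the seed handling, and the `"cuda"` default are assumptions, and `model_id` is left to the caller because the text does not name a checkpoint.

```python
PROMPT = "a photograph of an old warrior chief"


def generate_fp16(model_id: str, prompt: str = PROMPT, seed: int = 0, device: str = "cuda"):
    """Load a Stable Diffusion checkpoint in float16 and render one image."""
    # Heavy imports are deferred so this module can be imported
    # on a machine without torch or diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    # torch_dtype=torch.float16 asks diffusers to hold the weights in half precision.
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to(device)

    # A fixed seed keeps repeated runs comparable (assumption: one generator per call).
    generator = torch.Generator(device).manual_seed(seed)
    return pipe(prompt, generator=generator).images[0]
```

Calling `generate_fp16("<your-model-id>")` on a CUDA machine should return a PIL image. Half precision generally needs a GPU, so the CPU path is not covered here.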

If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, they are available in a separate repository. Model description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub repository, paper.

If you are limited by GPU memory, you can tell diffusers to expect the weights to be in float16 precision. Note: If you are limited by TPU memory, make sure to load the FlaxStableDiffusionPipeline in bfloat16 precision instead of the default float32 precision. You can do so by telling diffusers to load the weights from the "bf16" branch.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive, or content that propagates historical or current stereotypes. The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities. Using the model to generate content that is cruel to individuals is a misuse of this model.

Additionally, the community has started fine-tuning many of the above versions on certain styles, with some of them reaching extremely high quality and gaining a lot of traction.

Welcome to this Hugging Face Inference Endpoints guide on how to deploy Stable Diffusion to generate images for a given input prompt. This guide will not explain how the model works. Inference Endpoints supports all the Transformers and Sentence-Transformers tasks as well as diffusers tasks, and any arbitrary ML framework through easy customization by adding a custom inference handler. This custom inference handler can be used to implement simple inference pipelines for ML frameworks like Keras, TensorFlow, and scikit-learn, or to add custom business logic to your existing transformers pipeline.

The first step is to deploy our model as an Inference Endpoint. To do so, we add the Hugging Face repository ID of the Stable Diffusion model we want to deploy. Note: If the repository does not show up in the search, it might be gated.
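The custom inference handler mentioned above is, by the documented convention as I understand it, a `handler.py` file in the model repository that exposes an `EndpointHandler` class with `__init__(path)` and `__call__(data)`. The sketch below is a minimal version under stated assumptions: the `"inputs"` request key, the `"image"` response key, JPEG encoding, the float16 load, and the `to_base64` helper are all illustrative choices rather than anything this guide specifies.

```python
import base64
from io import BytesIO
from typing import Any, Dict


def to_base64(raw: bytes) -> str:
    """Encode raw bytes as an ASCII base64 string suitable for a JSON response."""
    return base64.b64encode(raw).decode("ascii")


class EndpointHandler:
    def __init__(self, path: str = ""):
        # Heavy imports are deferred to instantiation time, so importing
        # this file does not require torch or diffusers.
        import torch
        from diffusers import StableDiffusionPipeline

        # `path` is the local directory holding the deployed repository.
        self.pipe = StableDiffusionPipeline.from_pretrained(
            path, torch_dtype=torch.float16
        ).to("cuda")

    def __call__(self, data: Dict[str, Any]) -> Dict[str, str]:
        # Assumption: the prompt arrives under the "inputs" key.
        prompt = data["inputs"]
        image = self.pipe(prompt).images[0]

        # Serialize the PIL image to JPEG bytes, then to base64 text.
        buffer = BytesIO()
        image.save(buffer, format="JPEG")
        return {"image": to_base64(buffer.getvalue())}
```

Returning base64 text keeps the response JSON-serializable. A client would decode the `"image"` field back into bytes before opening it.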

We present SDXL, a latent diffusion model for text-to-image synthesis. Compared to previous versions of Stable Diffusion, SDXL leverages a three times larger UNet backbone: the increase in model parameters is mainly due to more attention blocks and a larger cross-attention context, as SDXL uses a second text encoder. We design multiple novel conditioning schemes and train SDXL on multiple aspect ratios. We also introduce a refinement model which is used to improve the visual fidelity of samples generated by SDXL using a post-hoc image-to-image technique. We demonstrate that SDXL shows drastically improved performance compared to the previous versions of Stable Diffusion and achieves results competitive with those of black-box state-of-the-art image generators.

Check out the Stability AI Hub organization for the official base and refiner model checkpoints! The SDXL pipeline inherits from DiffusionPipeline.

Stable Cascade works in a much smaller latent space than Stable Diffusion. Why is this important? The smaller the latent space, the faster you can run inference and the cheaper the training becomes. How small is the latent space? Stable Diffusion uses a compression factor of 8, resulting in a 1024x1024 image being encoded to 128x128. Stable Cascade achieves a compression factor of 42, meaning that it is possible to encode a 1024x1024 image to 24x24 while maintaining crisp reconstructions. The text-conditional model is then trained in the highly compressed latent space.
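As a quick check of that arithmetic, here is a minimal sketch that computes the latent side length for a given compression factor. The 1024-pixel input side and the helper names are illustrative choices, and flooring the division is an assumption about how the fractional result for a factor of 42 rounds down to 24.

```python
def latent_side(image_side: int, compression_factor: int) -> int:
    """Side length of the latent grid, flooring any fractional remainder."""
    return image_side // compression_factor


def latent_positions(image_side: int, compression_factor: int) -> int:
    """Number of spatial positions in a square latent grid."""
    side = latent_side(image_side, compression_factor)
    return side * side


if __name__ == "__main__":
    for name, factor in [("Stable Diffusion", 8), ("Stable Cascade", 42)]:
        side = latent_side(1024, factor)
        print(f"{name}: {side}x{side} latent, {latent_positions(1024, factor)} positions")
```

Under these assumptions, a factor of 8 gives a 128x128 grid (16384 positions) and a factor of 42 gives a 24x24 grid (576 positions), which is where the inference and training savings come from.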

By far most of the memory is taken up by the cross-attention layers. The difference seems very minor, but the new generations are arguably a bit sharper. These tips are applicable to all Stable Diffusion pipelines.

The easiest option is to keep the defaults suggested by the application. Additionally, it is currently only possible to deploy gated repositories from user accounts and not within organizations.

Intended uses include the generation of artworks and use in design and other artistic processes. Misuse includes sexual content without the consent of the people who might see it. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

This stable-diffusion-2 model is resumed from stable-diffusion-2-base (512-base-ema.ckpt). The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet.
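The reconstruction objective described above is commonly written as a mean squared error between the sampled noise and the UNet's prediction. The notation below follows the usual latent diffusion formulation and is an assumption, since the text does not define symbols: $z_t$ is the noised latent at timestep $t$, $c$ is the text conditioning, $\epsilon$ is the Gaussian noise that was added, and $\epsilon_\theta$ is the UNet.

```latex
\mathcal{L} = \mathbb{E}_{z,\, c,\, \epsilon \sim \mathcal{N}(0, I),\, t}
\left[ \left\lVert \epsilon - \epsilon_\theta(z_t, t, c) \right\rVert_2^2 \right]
```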

The Diffusers library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions. Learn the fundamental skills you need to start generating outputs, build your own diffusion system, and train a diffusion model.

Each Inference Endpoint comes with an inference widget similar to the ones you know from the Hugging Face Hub. The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept. Not optimized for FID scores.

The community has also found some nice tricks to ease the memory constraints further. Next, we can also try to optimize single components of the pipeline. The easiest way to see how many images you can generate at once is to try out different batch sizes until you get an OutOfMemoryError (OOM).
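The batch-size search described above can be sketched as a doubling loop that stops at the first out-of-memory failure. This is a hedged illustration: `find_max_batch_size`, `try_batch`, and the doubling schedule are hypothetical choices, and the exception type is a parameter because the error class depends on the runtime (with recent PyTorch on a GPU it would typically be `torch.cuda.OutOfMemoryError`; Python's built-in `MemoryError` is only the stand-in default here).

```python
from typing import Callable, Type


def find_max_batch_size(
    try_batch: Callable[[int], object],
    oom_error: Type[BaseException] = MemoryError,
    start: int = 1,
    limit: int = 256,
) -> int:
    """Return the largest batch size that ran without raising `oom_error`.

    Batch sizes are doubled from `start` up to `limit`; 0 means even
    the first attempt failed.
    """
    largest_ok = 0
    batch_size = start
    while batch_size <= limit:
        try:
            try_batch(batch_size)  # e.g. run the pipeline on batch_size prompts
        except oom_error:
            break  # first failure ends the search
        largest_ok = batch_size
        batch_size *= 2
    return largest_ok


def fake_run(batch_size: int) -> None:
    """Stand-in workload that 'runs out of memory' above 10 images."""
    if batch_size > 10:
        raise MemoryError("simulated OOM")
```

With the stand-in workload, `find_max_batch_size(fake_run)` tries 1, 2, 4, 8, and 16 and settles on 8. In practice `try_batch` would wrap a pipeline call with a list of `batch_size` prompts. Freeing cached GPU memory between attempts may also be needed, which this sketch does not do.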
