Gradient Boosting in Python

Making machine learning algorithms increasingly accurate takes more than just fitting models and making predictions.

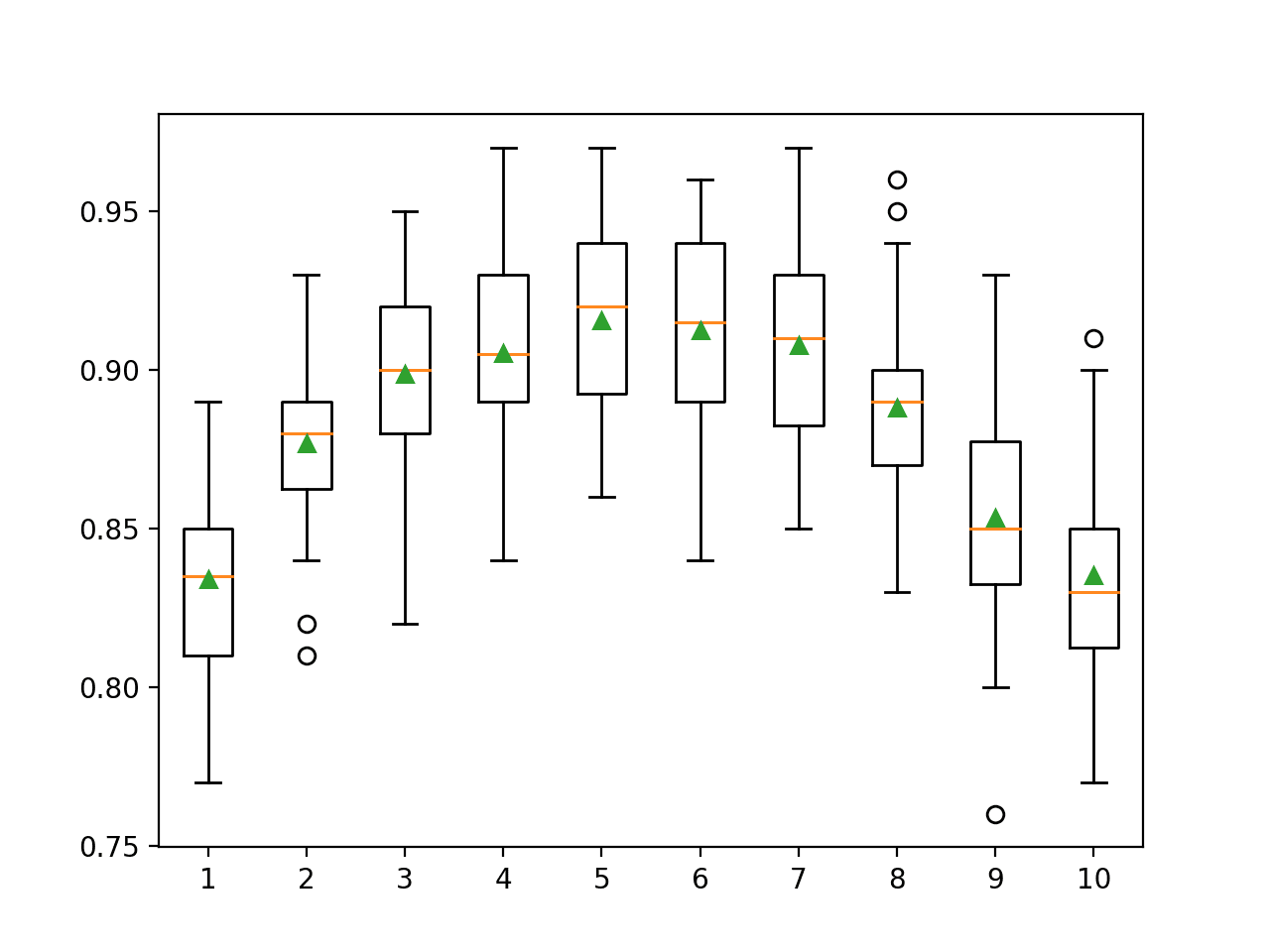

This example demonstrates how Gradient Boosting produces a predictive model from an ensemble of weak predictive models. Gradient boosting can be used for both regression and classification problems. Here, we train a model on the diabetes regression task, using GradientBoostingRegressor with least squares loss and regression trees of depth 4, and we set the regression model's parameters explicitly.

scikit-learn's GradientBoostingClassifier builds an additive model in a forward stage-wise fashion, which allows for the optimization of arbitrary differentiable loss functions. Binary classification is a special case where only a single regression tree is induced at each stage. The loss parameter is the loss function to be optimized; log loss, the default, is a good choice for classification with probabilistic outputs. The learning_rate parameter shrinks the contribution of each tree, and its values must be in the range [0.0, inf). The n_estimators parameter is the number of boosting stages to perform. Gradient boosting is fairly robust to over-fitting, so a large number usually results in better performance; values must be in the range [1, inf). The subsample parameter is the fraction of samples to be used for fitting the individual base learners. If it is smaller than 1.0, this results in Stochastic Gradient Boosting; values must be in the range (0.0, 1.0].

Rather than reweighting training samples as AdaBoost does, Gradient Boosting fits each new model to the negative gradient of the loss function with respect to the current predicted output.
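
To make the negative gradient concrete, here is a small NumPy sketch for two common losses, chosen by us for illustration: half squared error, whose negative gradient is the plain residual y - f, and binary log loss on raw log-odds scores f, whose negative gradient is y - sigmoid(f). A central finite-difference check confirms the closed forms. The input values are made up.

```python
import numpy as np

def squared_error(y, f):
    # Per-sample loss 0.5 * (y - f)^2; the 0.5 makes the gradient -(y - f)
    return 0.5 * (y - f) ** 2

def neg_grad_squared_error(y, f):
    # -dL/df = y - f, i.e. the ordinary residual
    return y - f

def log_loss(y, f):
    # Binary log loss with labels y in {0, 1} and raw scores f (log-odds)
    p = 1.0 / (1.0 + np.exp(-f))
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def neg_grad_log_loss(y, f):
    # -dL/df = y - sigmoid(f)
    return y - 1.0 / (1.0 + np.exp(-f))

def numeric_neg_grad(loss, y, f, eps=1e-6):
    # Element-wise central difference: each loss term depends only on its own f_i
    return -(loss(y, f + eps) - loss(y, f - eps)) / (2 * eps)

y_reg = np.array([3.0, -1.0, 2.0])
f_reg = np.array([2.5, 0.0, 2.0])
g_reg = neg_grad_squared_error(y_reg, f_reg)  # values 0.5, -1.0, 0.0

y_clf = np.array([1.0, 0.0])
f_clf = np.array([0.0, 0.0])
g_clf = neg_grad_log_loss(y_clf, f_clf)  # values 0.5, -0.5

print(g_reg, numeric_neg_grad(squared_error, y_reg, f_reg))
print(g_clf, numeric_neg_grad(log_loss, y_clf, f_clf))
```

The next weak learner is trained with these negative gradients as its targets, which is why boosting on squared error amounts to repeatedly fitting residuals.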

Gradient Boosting is a popular boosting algorithm in machine learning, used for classification and regression tasks. Boosting is an ensemble learning method that trains models sequentially, with each new model trying to correct the errors of the previous ones, so that several weak learners combine into a strong learner. The two most popular boosting algorithms are AdaBoost and Gradient Boosting. In Gradient Boosting, each new model is trained to reduce a loss function of the current ensemble, such as mean squared error or cross-entropy, in a way analogous to gradient descent. In each iteration, the algorithm computes the gradient of the loss function with respect to the predictions of the current ensemble and then trains a new weak model to fit the negative of this gradient; adding that model moves the predictions in the direction that decreases the loss.
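
The iteration described above can be sketched from scratch for squared-error loss, where the negative gradient is simply the residual. This is a minimal illustration, not scikit-learn's implementation: the class name SimpleGradientBoostingRegressor is hypothetical, the weak learners are shallow scikit-learn DecisionTreeRegressor models, and the sine-wave data is synthetic.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

class SimpleGradientBoostingRegressor:
    """Hypothetical minimal gradient boosting for squared-error loss."""

    def __init__(self, n_estimators=100, learning_rate=0.1, max_depth=3):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate
        self.max_depth = max_depth

    def fit(self, X, y):
        # Stage 0: a constant prediction; the mean minimizes squared error
        self.init_ = float(np.mean(y))
        f = np.full(len(y), self.init_)
        self.trees_ = []
        for _ in range(self.n_estimators):
            # Negative gradient of 0.5 * (y - f)^2 w.r.t. f is the residual
            residual = y - f
            tree = DecisionTreeRegressor(max_depth=self.max_depth, random_state=0)
            tree.fit(X, residual)  # the weak learner fits the negative gradient
            # Take a small step (shrinkage) in the direction the tree predicts
            f += self.learning_rate * tree.predict(X)
            self.trees_.append(tree)
        return self

    def predict(self, X):
        # Sum the initial constant and every tree's shrunken contribution
        f = np.full(X.shape[0], self.init_)
        for tree in self.trees_:
            f += self.learning_rate * tree.predict(X)
        return f

# Synthetic 1-D regression data (illustrative assumption)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

model = SimpleGradientBoostingRegressor(n_estimators=100).fit(X, y)
mse_before = np.mean((y - np.mean(y)) ** 2)
mse_after = np.mean((y - model.predict(X)) ** 2)
print(f"training MSE: constant {mse_before:.3f}, boosted {mse_after:.3f}")
```

The learning rate trades off against the number of trees: smaller steps usually need more stages to reach the same training loss.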

Two very famous examples of ensemble methods are gradient-boosted trees and random forests. More generally, ensemble models can be applied to any base learner beyond trees: in averaging methods such as bagging, model stacking, or voting, or in boosting, as in AdaBoost. Gradient-boosted decision trees (GBDT) are an excellent model for both regression and classification, in particular for tabular data.
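
One way to compare these ensemble families on tabular data is cross-validation. This sketch assumes the bundled breast cancer dataset and default hyperparameters for every model, both choices of ours; BaggingClassifier bags decision trees by default. No particular ranking is implied, since results vary with the dataset and tuning.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (
    BaggingClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.model_selection import cross_val_score

# A small tabular classification dataset bundled with scikit-learn
X, y = load_breast_cancer(return_X_y=True)

models = {
    "gradient-boosted trees": GradientBoostingClassifier(random_state=0),
    "random forest": RandomForestClassifier(random_state=0),
    "bagging (decision trees)": BaggingClassifier(random_state=0),
}

mean_scores = {}
for name, model in models.items():
    # Accuracy is the default score for classifiers
    scores = cross_val_score(model, X, y, cv=5)
    mean_scores[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```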

Gradient boosting classifiers are a group of machine learning algorithms that combine many weak learning models together to create a strong predictive model. Decision trees are usually used when doing gradient boosting. Gradient boosting models are becoming popular because of their effectiveness at classifying complex datasets, and have recently been used to win many Kaggle data science competitions.

Gradient boosting is trained progressively, one tree at a time, with each new tree trained to correct the errors of the preceding ones; this is how trees are added incrementally, iteratively, and sequentially. The initial predictions, before any tree is added, are computed by an estimator object (the init parameter in scikit-learn). When fitting, the target values are strings or integers in classification, where labels must correspond to classes, and real numbers in regression.

Constraints on the structure of each decision tree can help prevent overfitting; for example, a maximum number of leaves can be set when creating each tree. A useful diagnostic is to plot the deviance (the loss) on the training and test data against the number of boosting iterations.

After training, permutation feature importance shows which features the model relies on. Features whose importance error bars overlap with 0 are less predictive. See Permutation feature importance for more details.

For comparison, AdaBoostClassifier is a meta-estimator that begins by fitting a classifier on the original dataset and then fits additional copies of the classifier on the same dataset, where the weights of incorrectly classified instances are adjusted such that subsequent classifiers focus more on difficult cases.
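
The diagnostics described above can be computed with scikit-learn's train_score_ attribute, staged_predict, and permutation_importance. This sketch reuses the diabetes data, and its hyperparameters are assumptions chosen for illustration: max_leaf_nodes=8 is the leaf-count constraint, and n_repeats=10 controls how many shuffles produce each importance's spread.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.inspection import permutation_importance
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

data = load_diabetes()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, random_state=0
)

reg = GradientBoostingRegressor(
    n_estimators=300,
    learning_rate=0.05,
    max_leaf_nodes=8,  # constrain each tree's structure to limit overfitting
    random_state=0,
)
reg.fit(X_train, y_train)

# Training loss after each boosting iteration, as defined by the estimator's loss
train_loss = reg.train_score_
# Test MSE after each iteration, from the ensemble's staged predictions
test_loss = np.array(
    [mean_squared_error(y_test, y_pred) for y_pred in reg.staged_predict(X_test)]
)
best_iter = int(np.argmin(test_loss)) + 1
print(f"lowest test MSE {test_loss.min():.1f} after {best_iter} trees")

# Permutation importance on held-out data; importances_std gives the error bars
result = permutation_importance(reg, X_test, y_test, n_repeats=10, random_state=0)
for i in result.importances_mean.argsort()[::-1]:
    mean, std = result.importances_mean[i], result.importances_std[i]
    print(f"{data.feature_names[i]:>4}: {mean:.3f} +/- {std:.3f}")
```

Plotting train_loss and test_loss against the iteration number (1 to 300) with any plotting library gives the deviance curves; a test curve that flattens or rises while the training curve keeps falling indicates overfitting.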

AdaBoost is more susceptible to noise and outliers in the data, as it assigns high weights to misclassified samples. Gradient Boosting can use a wide range of base learners, such as decision trees and linear models, although the base learners are usually decision trees. In the tree-based setting, the first tree (Tree1) is trained using the feature matrix X and the labels y, and each subsequent tree is trained on the errors left by the trees before it.
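
To probe the noise sensitivity claim on your own data, you can compare both boosters with and without label noise. This is a rough experimental sketch, not a benchmark: it assumes synthetic make_classification data, where flip_y is the fraction of samples whose class is assigned at random, and default hyperparameters for both models.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier
from sklearn.model_selection import cross_val_score

results = {}
for flip_y in (0.0, 0.2):
    # flip_y: fraction of samples whose class label is assigned at random
    X, y = make_classification(
        n_samples=1000, n_features=20, flip_y=flip_y, random_state=0
    )
    for name, model in (
        ("AdaBoost", AdaBoostClassifier(random_state=0)),
        ("GradientBoosting", GradientBoostingClassifier(random_state=0)),
    ):
        acc = cross_val_score(model, X, y, cv=5).mean()
        results[(name, flip_y)] = acc
        print(f"flip_y={flip_y:.1f}  {name:<16} accuracy {acc:.3f}")
```

Accuracy here is measured against the noisy labels, so both models lose accuracy when flip_y increases; the quantity of interest is how much each one drops, and that can vary with the dataset and hyperparameters.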
