Faster whisper

One feature of Whisper that I think people underuse is the ability to prompt the model to influence the output tokens, and faster-whisper supports this too. However, I seem to have trouble getting the context to persist across hundreds of tokens: tokens that were corrected may revert to the model's underlying tokens if they weren't repeated often enough.
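The following is a minimal sketch of prompting with faster-whisper. It passes a glossary of preferred spellings through the `initial_prompt` argument of `WhisperModel.transcribe`. `WhisperModel`, `transcribe` and `initial_prompt` are faster-whisper's own names. Everything else is a hypothetical illustration: the glossary format, the helper names, the "small" model size and the CPU/int8 settings.

```python
from typing import Iterable


def build_initial_prompt(terms: Iterable[str]) -> str:
    """Join preferred spellings into a short prompt (hypothetical helper).

    Whisper conditions its decoding on the prompt text, so listing exact
    spellings nudges it toward them.
    """
    unique: list[str] = []
    for term in terms:
        term = term.strip()
        # Skip blanks and duplicates while keeping the original order.
        if term and term not in unique:
            unique.append(term)
    if not unique:
        return ""
    return "Glossary: " + ", ".join(unique) + "."


def transcribe_with_prompt(audio_path: str, terms: Iterable[str]) -> str:
    # Imported lazily so the helper above works without the package installed.
    from faster_whisper import WhisperModel

    model = WhisperModel("small", device="cpu", compute_type="int8")
    segments, _info = model.transcribe(
        audio_path,
        # Pass None rather than an empty string when there are no terms.
        initial_prompt=build_initial_prompt(terms) or None,
    )
    # segments is a generator; joining it is what runs the transcription.
    return " ".join(segment.text.strip() for segment in segments)
```

This may explain why corrections fade over long recordings. Whisper carries only a limited slice of previous text into each new 30-second window, roughly the last half of its text context. An initial prompt can therefore scroll out of view after a few hundred tokens.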

Faster-whisper is a reimplementation of OpenAI's Whisper model using CTranslate2, a fast inference engine for Transformer models. This container provides a Wyoming protocol server for faster-whisper. We use the Docker manifest for multi-platform awareness; more information is available from Docker and in our announcement. Simply pulling lscr. This image provides various versions that are available via tags.

For reference, the project reports the time and memory usage required to transcribe 13 minutes of audio with different implementations. Unlike openai-whisper, faster-whisper does not require FFmpeg to be installed on the system.

GPU execution requires NVIDIA's cuBLAS and cuDNN libraries. There are multiple ways to install these libraries. The recommended way is described in the official NVIDIA documentation, but we also suggest other installation methods below. On Linux, these libraries can be installed with pip. On Windows, decompress the downloaded archive and place the libraries in a directory included in the PATH.

The module can be installed from PyPI (pip install faster-whisper). Warning: segments is a generator, so the transcription only starts when you iterate over it. You can run the transcription to completion by collecting the segments in a list or by iterating over them in a for loop. For usage of faster-distil-whisper, please refer to the project documentation.

The library integrates the Silero VAD model to filter out parts of the audio without speech; this is enabled with vad_filter=True. The default behavior is conservative and only removes silence longer than 2 seconds. See the available VAD parameters and default values in the source code. See more model and transcription options in the WhisperModel class implementation.
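The usage described above can be sketched as follows. `WhisperModel`, `transcribe`, `beam_size`, `vad_filter`, `vad_parameters` and `info.language` are faster-whisper's own names. The following are assumptions for illustration:

- the model size and device settings
- the 500 ms silence threshold
- the `DemoSegment` stand-in
- the `to_srt` helper

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class DemoSegment:
    # Hypothetical stand-in with the same start/end/text fields as faster-whisper segments.
    start: float
    end: float
    text: str


def srt_timestamp(seconds: float) -> str:
    # SRT timestamps use the HH:MM:SS,mmm format.
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments: Iterable) -> str:
    """Render any objects with start/end/text as SRT (hypothetical helper)."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        span = f"{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}"
        blocks.append(f"{index}\n{span}\n{seg.text.strip()}\n")
    return "\n".join(blocks)


def transcribe_file(audio_path: str) -> str:
    from faster_whisper import WhisperModel  # lazy import

    model = WhisperModel("large-v3", device="cuda", compute_type="float16")
    segments, info = model.transcribe(
        audio_path,
        beam_size=5,
        vad_filter=True,  # drop non-speech audio with Silero VAD
        vad_parameters=dict(min_silence_duration_ms=500),  # more aggressive than 2 s
    )
    print(f"Detected language {info.language} ({info.language_probability:.2f})")
    # segments is a generator: nothing is decoded until to_srt iterates over it.
    return to_srt(segments)
```

`to_srt` only relies on the `start`, `end` and `text` attributes. That lets it be checked with `DemoSegment` objects, without loading a model.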

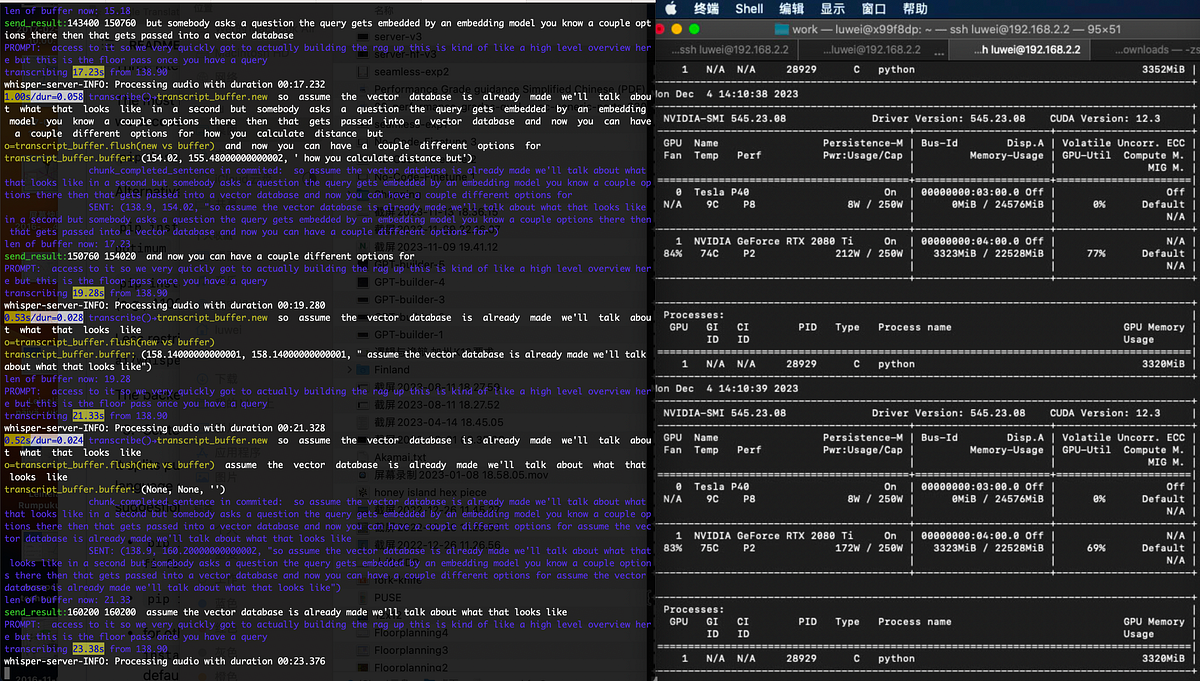

Basically, the question is whether the model can be run in a streaming fashion, and whether it is still fast when run that way.
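Whisper processes audio in 30-second windows. One common way to approximate streaming is therefore to transcribe overlapping chunks as audio arrives. The sketch below covers only the chunking step. The 25-second step and the function name are assumptions, and stitching the overlapping transcripts back together is not shown.

```python
from typing import Iterator, Sequence

SAMPLE_RATE = 16_000  # Whisper models expect 16 kHz mono audio


def sliding_windows(
    samples: Sequence[float],
    window_s: float = 30.0,
    step_s: float = 25.0,
) -> Iterator[tuple[float, Sequence[float]]]:
    """Yield (start_time_s, chunk) pairs overlapping by window_s - step_s seconds.

    Each chunk could be handed to a transcriber as it becomes available.
    The overlap gives the model some context across chunk boundaries.
    """
    if not 0 < step_s <= window_s:
        raise ValueError("step_s must be in (0, window_s]")
    window = int(window_s * SAMPLE_RATE)
    step = int(step_s * SAMPLE_RATE)
    start = 0
    while True:
        yield start / SAMPLE_RATE, samples[start : start + window]
        # Stop once this window reaches the end of the buffer.
        if start + window >= len(samples):
            break
        start += step
```

Whether this is "still fast" depends on the overlap. A smaller step lowers latency but re-transcribes more audio.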

Large language models (LLMs) are AI models that use deep learning architectures, such as transformers, to process vast amounts of text data. This lets them learn patterns of human language and generate high-quality text outputs. They are used in applications like speech to text, chatbots, virtual assistants, language translation and sentiment analysis. However, these LLMs are difficult to use because they require significant computational resources to train and run effectively. More computational resources require complex scaling infrastructure and often result in higher cloud costs. To help solve this problem of using LLMs at scale, Q Blocks has introduced a decentralized GPU computing approach coupled with optimized model deployment. According to Q Blocks, this approach reduces the cost of execution several-fold and also increases throughput, serving more samples per second. In this article, we compare how the cost of execution can be reduced, and throughput increased, several-fold for a large model like OpenAI Whisper in a speech-to-text transcription use case. We do this by first optimising the AI model and then running it on Q Blocks's cost-efficient GPU cloud. For any AI model, there are two major phases of execution: learning (the model training phase) and execution (the model deployment phase).
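Optimising the model and moving to cheaper GPUs compound, and a little arithmetic shows why. The cost per hour of transcribed audio is the GPU's hourly price divided by how many hours of audio it processes per hour. The function names below are illustrative, and the numbers in the usage note are hypothetical placeholders, not Q Blocks's figures.

```python
def cost_per_audio_hour(gpu_price_per_hour: float, speedup: float) -> float:
    """Cost to transcribe one hour of audio.

    speedup = audio duration / processing time, so 10.0 means one GPU hour
    transcribes ten hours of audio.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return gpu_price_per_hour / speedup


def cost_reduction(
    base_price: float, base_speedup: float, new_price: float, new_speedup: float
) -> float:
    # How many times cheaper the new setup is per hour of audio.
    return cost_per_audio_hour(base_price, base_speedup) / cost_per_audio_hour(
        new_price, new_speedup
    )
```

For example, suppose a model runs 4 times faster on a GPU that costs half as much per hour. Then `cost_reduction(2.0, 1.0, 1.0, 4.0)` is 8.0: the price factor and the speedup factor multiply.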

The Whisper models from OpenAI are best-in-class in automatic speech recognition quality. However, transcribing audio with these models still takes time. Is there a way to reduce the required transcription time? Of course, it is always possible to upgrade the hardware, but it is wise to start with the software. This brings us to faster-whisper, which runs Whisper on CTranslate2, a library for efficient inference with Transformer models. CTranslate2 gains its efficiency through techniques such as weight quantization, layer fusion and batch reordering. For faster-whisper, the result is a noticeable performance boost, and the required VRAM drops dramatically.
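To illustrate why weight quantization saves memory, here is a minimal sketch of generic symmetric per-tensor int8 quantization. It is not CTranslate2's exact implementation, which differs in details such as how scales are grouped. The function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: w ≈ q * scale, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The largest magnitude maps to 127; an all-zero tensor keeps scale 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    # Recover approximate float weights; each error is at most scale / 2.
    return [v * scale for v in q]
```

Each quantized value fits in one byte instead of the four bytes of a float32 weight. This is one reason quantized models need much less memory. In faster-whisper, the precision is chosen with the `compute_type` argument of `WhisperModel`, for example "int8" or "int8_float16".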

Containers are configured using parameters passed at runtime, such as those above. Can this do real-time streaming transcription? I'd be interested in running this over a home camera system, but it would need to handle long stretches without speech well. I also never said fine-tuning would be easier than prompting. I think there is more to it than just batch speed. I'm sort of confused: is this just a CLI wrapper around faster-whisper, transformers and distil-whisper? I'm not sure what better solution you envision, but fine-tuning is certainly not easier than prompting. They could be the original OpenAI models or user fine-tuned models.

Released: Mar 1,

When it doesn't know, the current Whisper says "like and subscribe" or "thanks for watching the video"; a VAD filter can help here. We open pull requests on models[0] to get them running on Replicate, so people can try out a demo of the model and run it through an API. More recent GPUs are vastly faster. Someone mentioned Alexa-style home assistants, which would have audio snippets short enough that the initial prompt would actually be useful. Please read the descriptions carefully and exercise caution when using unstable or development tags. It would just be your model that knows all the specific words and spellings you prefer. Insanely Fast Whisper on GitHub.
