Bitsandbytes

The library provides quantization primitives for 8-bit and 4-bit operations through bitsandbytes.nn.Linear8bitLt and bitsandbytes.nn.Linear4bit, and 8-bit optimizers through bitsandbytes.optim. There are ongoing efforts to support further hardware backends.

As we strive to make models even more accessible to anyone, we decided to collaborate with bitsandbytes again to allow users to run models in 4-bit precision. This includes a large majority of HF models, in any modality (text, vision, multi-modal, etc.). Users can also train adapters on top of 4-bit models, leveraging tools from the Hugging Face ecosystem.

The abstract of the QLoRA paper reads as follows: We present QLoRA, an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance. Our best model family, which we name Guanaco, outperforms all previous openly released models on the Vicuna benchmark.

Windows support is well advanced and on its way. The majority of bitsandbytes is licensed under MIT; however, small portions of the project are available under separate license terms, as the parts adapted from PyTorch are licensed under the BSD license.

As discussed in the earlier blog post, a floating-point number contains n bits, with each bit falling into a specific category that is responsible for representing a component of the number (sign, mantissa, and exponent).
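To make the sign, exponent, and mantissa split concrete, here is a minimal sketch that pulls the three fields out of a 32-bit float using only the Python standard library. The helper name `float32_fields` is made up for this illustration; the field widths (1, 8, and 23 bits) are those of the IEEE 754 single-precision format.

```python
import struct


def float32_fields(value: float) -> tuple:
    """Split an IEEE 754 float32 into its (sign, exponent, mantissa) bit fields."""
    # Pack as a big-endian float32, then reinterpret the same 4 bytes as an unsigned int.
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    sign = bits >> 31               # 1 bit: 0 for positive, 1 for negative
    exponent = (bits >> 23) & 0xFF  # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF      # 23 bits: fractional part of the significand
    return sign, exponent, mantissa


# -6.25 is -1.1001 (binary) times 2**2
sign, exponent, mantissa = float32_fields(-6.25)
print(sign, exponent - 127, f"{mantissa:023b}")
# prints: 1 2 10010000000000000000000
```

Lower-precision formats keep the same three categories and simply give fewer bits to the exponent and mantissa.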

Bitsandbytes is a lightweight wrapper around CUDA custom functions, in particular 8-bit optimizers and quantization functions.

The bitsandbytes library is currently only supported on Linux distributions (for example, Ubuntu).

You can now load any PyTorch model in 8-bit or 4-bit with a few lines of code. To learn more about how bitsandbytes quantization works, check out the blog posts on 8-bit quantization and 4-bit quantization. First, initialize your model. Then, get the path to your model's weights. Finally, set your quantization configuration with BnbQuantizationConfig.
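The three steps above can be sketched roughly as follows, assuming the Accelerate integration (`accelerate.utils.BnbQuantizationConfig` and `load_and_quantize_model`) and a CUDA-enabled bitsandbytes install. The wrapper function `load_quantized` and its arguments are hypothetical names for this illustration, and the threshold and quantization type shown are example values, not recommendations.

```python
def load_quantized(model, weights_location, use_4bit=False):
    """Quantize an already-initialized model with Accelerate's bitsandbytes support.

    `model` is a torch.nn.Module you have constructed yourself (step 1) and
    `weights_location` is the path to its checkpoint (step 2); both names are
    placeholders for this sketch.
    """
    # Imported lazily so the sketch can be defined without the GPU stack installed.
    from accelerate.utils import BnbQuantizationConfig, load_and_quantize_model

    # Step 3: describe how the weights should be quantized.
    if use_4bit:
        config = BnbQuantizationConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4")
    else:
        config = BnbQuantizationConfig(load_in_8bit=True, llm_int8_threshold=6)

    # Load the checkpoint and swap supported layers for their quantized versions.
    return load_and_quantize_model(
        model,
        bnb_quantization_config=config,
        weights_location=weights_location,
        device_map="auto",
    )
```

Calling `load_quantized(my_model, "path/to/weights")` would return the quantized model; both arguments here stand in for your own model and checkpoint path.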

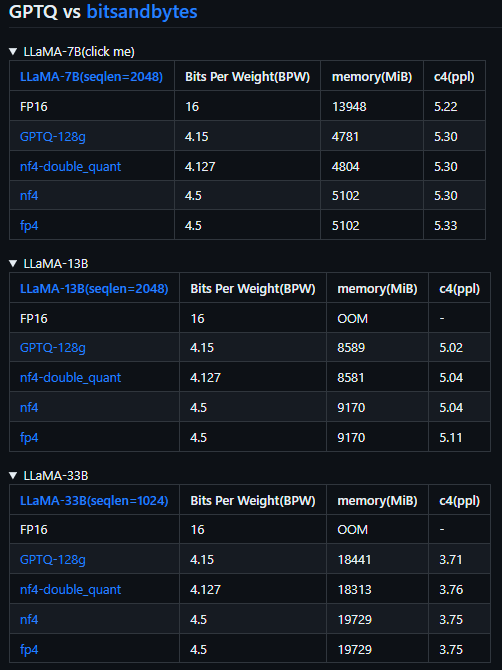

You can play with different variants of 4-bit quantization, such as NF4 (normalized float 4) or pure FP4 quantization. A rule of thumb is: use double quant if you have problems with memory, use NF4 for higher precision, and use a 16-bit dtype for faster finetuning.

With the 8-bit optimizers, we can also configure specific hyperparameters for particular layers, such as embedding layers. Small parameter tensors are kept in 32-bit even when an 8-bit optimizer is used. This is done since such small tensors do not save much memory and often contain highly variable parameters (biases) or parameters that require high precision (batch norm, layer norm).

We release all of our models and code, including CUDA kernels for 4-bit training.

The output activations of the original (frozen) pretrained weights (left) are augmented by a low-rank adapter comprised of weight matrices A and B (right).
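The 4-bit options mentioned above (NF4 versus FP4, double quantization, and a 16-bit compute dtype) map onto keyword arguments of `BitsAndBytesConfig` in the transformers library. The following is a minimal sketch under that assumption; the function names, the `model_id` argument, and the choice of `bfloat16` as the 16-bit dtype are illustrative picks, not settings taken from the text.

```python
# Plain keyword arguments, one per item in the rule of thumb.
FOUR_BIT_OPTIONS = {
    "load_in_4bit": True,
    "bnb_4bit_quant_type": "nf4",       # NF4 for higher precision ("fp4" is the alternative)
    "bnb_4bit_use_double_quant": True,  # double quantization when memory is tight
}


def make_4bit_config():
    """Build a transformers BitsAndBytesConfig from the options above."""
    # Imported lazily so the sketch can be defined without torch/transformers installed.
    import torch
    from transformers import BitsAndBytesConfig

    # A 16-bit compute dtype for faster finetuning; bfloat16 is an assumption here.
    return BitsAndBytesConfig(bnb_4bit_compute_dtype=torch.bfloat16, **FOUR_BIT_OPTIONS)


def load_4bit_model(model_id):
    """Load a causal language model in 4-bit; `model_id` is a placeholder Hub name."""
    from transformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=make_4bit_config(),
        device_map="auto",
    )
```

Keeping the options in a plain dictionary makes it easy to toggle one setting at a time (for example, switching `"nf4"` to `"fp4"`) and compare memory use and quality.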

The matrix multiplication and training will be faster if one uses a 16-bit compute dtype. Keep in mind that loading a quantized model will automatically cast the model's other submodules to the float16 dtype. For more information, we recommend reading the fundamentals of floating-point representation through this wikibook document.

The authors would also like to thank Pedro Cuenca for kindly reviewing the blogpost, and Olivier Dehaene and Omar Sanseviero for their quick and strong support for the integration of the paper's artifacts on the HF Hub.

The requirements can best be fulfilled by installing PyTorch via Anaconda. If you want to optimize some unstable parameters with 32-bit Adam and others with 8-bit Adam, you can use the GlobalOptimManager.
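The GlobalOptimManager usage mentioned above can be sketched as follows, assuming a CUDA-enabled bitsandbytes install and the `bnb.optim.GlobalOptimManager` and `bnb.optim.Adam` interfaces. The wrapper function and its arguments (`model`, `unstable_param`, `lr`) are hypothetical names for this illustration.

```python
def build_mixed_precision_adam(model, unstable_param, lr=1e-3):
    """Use 8-bit Adam for every parameter except `unstable_param`, which keeps 32-bit state.

    `model` is a torch.nn.Module still on the CPU; `unstable_param` is one of
    its parameters (for example an embedding weight).
    """
    # Imported lazily so the sketch can be defined without bitsandbytes installed.
    import bitsandbytes as bnb

    manager = bnb.optim.GlobalOptimManager.get_instance()
    # Parameters are registered while the model is still on the CPU.
    manager.register_parameters(model.parameters())

    model = model.cuda()
    # 8-bit optimizer state for all parameters by default.
    optimizer = bnb.optim.Adam(model.parameters(), lr=lr, optim_bits=8)

    # Override: this one parameter now uses 32-bit optimizer state.
    manager.override_config(unstable_param, "optim_bits", 32)
    return model, optimizer
```

The override is per parameter tensor, so the same call can be repeated for each layer that turns out to be unstable during training.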
